6.7. Getting started with PyMC3

Based on a notebook by John Salvatier, Thomas V. Wiecki, Christopher Fonnesbeck from [https://docs.pymc.io/en/stable/pymc-examples/examples/getting_started.html].

Overview of PyMC3

From [https://docs.pymc.io/en/stable/about.html]:

PyMC3 is a probabilistic programming package for Python that allows users to fit Bayesian models using a variety of numerical methods, most notably Markov chain Monte Carlo (MCMC) and variational inference (VI). Its flexibility and extensibility make it applicable to a large suite of problems. Along with core model specification and fitting functionality, PyMC3 includes functionality for summarizing output and for model diagnostics.

Features of PyMC3

- PyMC3 is an open source Probabilistic Programming framework written in Python. It uses Theano to calculate gradients it needs for HMC using automatic differentiation.

- “Probabilistic Programming allows for automatic Bayesian inference on user-defined probabilistic models.”

- PyMC3 compiles probabilistic programs on-the-fly to C for increased speed.

- It allows models to be specified directly in Python (cf. PyStan) with intuitive and readable syntax that mimics the syntax used by statisticians.

- “It features next-generation Markov chain Monte Carlo (MCMC) sampling algorithms such as the No-U-Turn Sampler (NUTS; Hoffman, 2014), a self-tuning variant of Hamiltonian Monte Carlo (HMC; Duane, 1987). This class of samplers works well on high dimensional and complex posterior distributions and allows many complex models to be fit without specialized knowledge about fitting algorithms.”

- NUTS has self-tuning strategies to set HMC tunable parameters adaptively.

About Theano: “Theano is a library that allows expressions to be defined using generalized vector data structures called tensors, which are tightly integrated with the popular NumPy ndarray data structure, and similarly allow for broadcasting and advanced indexing, just as NumPy arrays do. Theano also automatically optimizes the likelihood’s computational graph for speed and provides simple GPU integration.”

A Motivating Example: Linear Regression

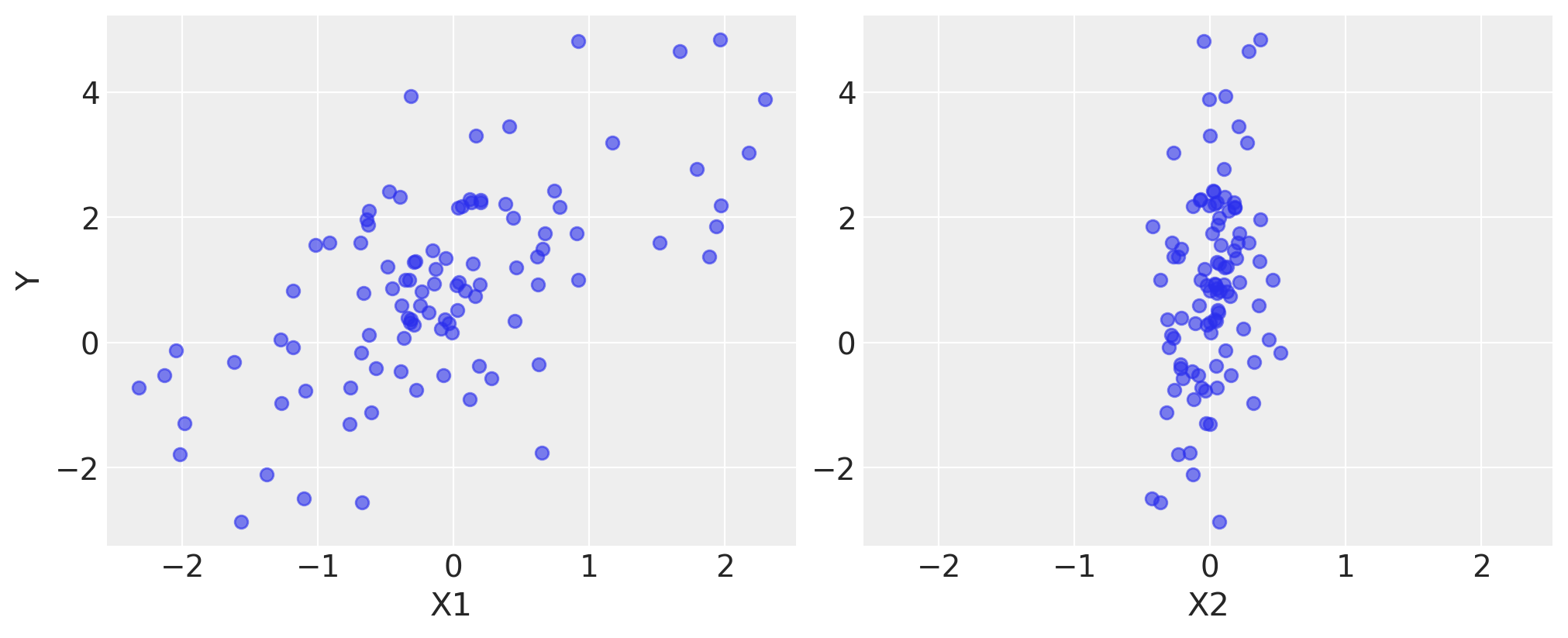

To introduce model definition, fitting and posterior analysis, we first consider a simple Bayesian linear regression model with normal priors for the parameters. We are interested in predicting outcomes \(Y\) as normally-distributed observations with an expected value \(\mu\) that is a linear function of two predictor variables, \(X_1\) and \(X_2\):

\[
\begin{aligned}
Y &\sim \mathcal{N}(\mu, \sigma^2) \\
\mu &= \alpha + \beta_1 X_1 + \beta_2 X_2
\end{aligned}
\]

where \(\alpha\) is the intercept, and \(\beta_i\) is the coefficient for covariate \(X_i\), while \(\sigma\) represents the observation error. Since we are constructing a Bayesian model, we must assign a prior distribution to the unknown variables in the model. We choose zero-mean normal priors with variance of 100 for both regression coefficients, which corresponds to weak information regarding the true parameter values. We choose a half-normal distribution (normal distribution bounded at zero) as the prior for \(\sigma\).

Generating data

We can simulate some artificial data from this model using only NumPy’s random module, and then use PyMC3 to try to recover the corresponding parameters. We are intentionally generating the data to correspond closely to the PyMC3 model structure.

import arviz as az

import matplotlib.pyplot as plt

import numpy as np

%config InlineBackend.figure_format = 'retina'

# Initialize random number generator

RANDOM_SEED = 8927

np.random.seed(RANDOM_SEED)

az.style.use("arviz-darkgrid")

# True parameter values

alpha, sigma = 1, 1

beta = [1, 2.5]

# Size of dataset

size = 100

# Predictor variable

X1 = np.random.randn(size)

X2 = np.random.randn(size) * 0.2

# Simulate outcome variable

Y = alpha + beta[0] * X1 + beta[1] * X2 + np.random.randn(size) * sigma
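As a quick sanity check on the simulated data (not part of the original notebook), one could fit the same linear relationship by ordinary least squares before building any Bayesian model. The sketch below regenerates the data with the same seed and call order as above; the names `alpha_true`, `beta_true`, `sigma_true`, `A`, `coef` and `residual_sd` are introduced here only for illustration.

```python
import numpy as np

# Regenerate the synthetic data (same seed and call order as above)
np.random.seed(8927)
alpha_true, sigma_true = 1, 1
beta_true = [1, 2.5]
size = 100
X1 = np.random.randn(size)
X2 = np.random.randn(size) * 0.2
Y = alpha_true + beta_true[0] * X1 + beta_true[1] * X2 + np.random.randn(size) * sigma_true

# Design matrix with a column of ones for the intercept
A = np.column_stack([np.ones(size), X1, X2])

# Ordinary least squares: minimizes ||A @ coef - Y||^2
coef, *_ = np.linalg.lstsq(A, Y, rcond=None)

# Residual standard deviation with 3 fitted coefficients
residual_sd = np.sqrt(np.sum((Y - A @ coef) ** 2) / (size - 3))

print("intercept, beta_1, beta_2:", coef)
print("residual sd:", residual_sd)
```

Because \(X_2\) is scaled by 0.2, it varies much less than \(X_1\), so its coefficient is the least precisely determined of the three. The same pattern shows up later in the wider posterior for beta[1].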

Here is what the simulated data look like.

fig, axes = plt.subplots(1, 2, sharex=True, figsize=(10, 4))

axes[0].scatter(X1, Y, alpha=0.6)

axes[1].scatter(X2, Y, alpha=0.6)

axes[0].set_ylabel("Y")

axes[0].set_xlabel("X1")

axes[1].set_xlabel("X2");

Model Specification

Specifying this model in PyMC3 is straightforward because the syntax is close to the statistical notation. For the most part, each line of Python code corresponds to a line in the model notation above.

First, we import PyMC3. We use the convention of importing it as pm.

import pymc3 as pm

print('Running on PyMC3 v{}'.format(pm.__version__))

Running on PyMC3 v3.11.2

Now we build our model, which we will present in full first, then explain each part line-by-line.

basic_model = pm.Model()

with basic_model:

    # Priors for unknown model parameters

    alpha = pm.Normal("alpha", mu=0, sigma=10)

    beta = pm.Normal("beta", mu=0, sigma=10, shape=2)

    sigma = pm.HalfNormal("sigma", sigma=1)

    # Expected value of outcome

    mu = alpha + beta[0] * X1 + beta[1] * X2

    # Likelihood (sampling distribution) of observations

    Y_obs = pm.Normal("Y_obs", mu=mu, sigma=sigma, observed=Y)

The first line,

basic_model = pm.Model()

creates a new Model object which is a container for the model random variables.

Following instantiation of the model, the subsequent specification of the model components is performed inside a with statement:

with basic_model:

This creates a context manager, with our basic_model as the context, that includes all statements until the indented block ends. This means all PyMC3 objects introduced in the indented code block below the with statement are added to the model behind the scenes. Absent this context manager idiom, we would be forced to manually associate each of the variables with basic_model right after we create them. If you try to create a new random variable without a with model: statement, it will raise an error since there is no obvious model for the variable to be added to.
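The registration idea described above can be illustrated with a toy context manager in plain Python. This is only a sketch of the general idiom, not PyMC3's actual implementation; `ToyModel` and `ToyVariable` are hypothetical names invented for this example.

```python
class ToyModel:
    """Hypothetical stand-in for a model container (not PyMC3's implementation)."""

    _stack = []  # models whose `with` block is currently active

    def __init__(self):
        self.variables = {}

    def __enter__(self):
        ToyModel._stack.append(self)
        return self

    def __exit__(self, exc_type, exc_value, traceback):
        ToyModel._stack.pop()
        return False  # do not suppress exceptions

    @classmethod
    def current(cls):
        if not cls._stack:
            raise TypeError("No model on context stack; use a `with` block.")
        return cls._stack[-1]


class ToyVariable:
    """Hypothetical variable that registers itself with the active model."""

    def __init__(self, name):
        self.name = name
        ToyModel.current().variables[name] = self


toy_model = ToyModel()
with toy_model:
    ToyVariable("alpha")
    ToyVariable("beta")

print(sorted(toy_model.variables))

try:
    ToyVariable("orphan")  # created outside any `with` block
except TypeError as err:
    print("error:", err)
```

The variables never receive the model as an argument; they find it through the stack maintained by `__enter__` and `__exit__`, which is why creating one outside a `with` block fails.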

The first three statements in the context manager:

alpha = pm.Normal("alpha", mu=0, sigma=10)

beta = pm.Normal("beta", mu=0, sigma=10, shape=2)

sigma = pm.HalfNormal("sigma", sigma=1)

create stochastic random variables with Normal prior distributions for the regression coefficients with a mean of 0 and standard deviation of 10, and a half-normal distribution for the standard deviation of the observations, \(\sigma\). These are stochastic because their values are partly determined by their parents in the dependency graph of random variables, which for priors are simple constants, and partly random (or stochastic).

We call the Normal constructor to create a random variable to use as a normal prior. The first argument is always the name of the random variable, which should almost always match the name of the Python variable being assigned to, since it is sometimes used to retrieve the variable from the model for summarizing output. The remaining required arguments for a stochastic object are the parameters, in this case mu, the mean, and sigma, the standard deviation, to which we assign hyperparameter values for the model. In general, a distribution’s parameters are values that determine the location, shape or scale of the random variable, depending on the parameterization of the distribution. Most commonly used distributions, such as Beta, Exponential, Categorical, Gamma, Binomial and many others, are available in PyMC3.

The beta variable has an additional shape argument to denote it as a vector-valued parameter of size 2. The shape argument is available for all distributions and specifies the length or shape of the random variable, but is optional for scalar variables, since it defaults to a value of one. It can be an integer, to specify an array, or a tuple, to specify a multidimensional array (e.g. shape=(5,7) makes a random variable that takes on 5 by 7 matrix values).

Detailed notes about distributions, sampling methods and other PyMC3 functions are available in the API documentation.

Having defined the priors, the next statement creates the expected value mu of the outcomes, specifying the linear relationship:

mu = alpha + beta[0]*X1 + beta[1]*X2

This creates a deterministic random variable, which implies that its value is completely determined by its parents’ values. That is, there is no uncertainty beyond that which is inherent in the parents’ values. Here, mu is just the sum of the intercept alpha and the two products of the coefficients in beta and the predictor variables, whatever their values may be.

PyMC3 random variables and data can be arbitrarily added, subtracted, divided, multiplied together and indexed-into to create new random variables. This allows for great model expressivity. Many common mathematical functions like sum, sin, exp and linear algebra functions like dot (for inner product) and inv (for inverse) are also provided.

The final line of the model defines Y_obs, the sampling distribution of the outcomes in the dataset.

Y_obs = pm.Normal("Y_obs", mu=mu, sigma=sigma, observed=Y)

This is a special case of a stochastic variable that we call an observed stochastic, and represents the data likelihood of the model. It is identical to a standard stochastic, except that its observed argument, which passes the data to the variable, indicates that the values for this variable were observed, and should not be changed by any fitting algorithm applied to the model. The data can be passed in the form of either a numpy.ndarray or pandas.DataFrame object.

Notice that, unlike for the priors of the model, the parameters for the normal distribution of Y_obs are not fixed values, but rather are the deterministic object mu and the stochastic sigma. This creates parent-child relationships between the likelihood and these two variables.

Model fitting

Having completely specified our model, the next step is to obtain posterior estimates for the unknown variables in the model. Ideally, we could calculate the posterior estimates analytically, but for most non-trivial models, this is not feasible. We will consider two approaches, whose appropriateness depends on the structure of the model and the goals of the analysis: finding the maximum a posteriori (MAP) point using optimization methods, and computing summaries based on samples drawn from the posterior distribution using Markov Chain Monte Carlo (MCMC) sampling methods.

Maximum a posteriori methods

The maximum a posteriori (MAP) estimate for a model is the mode of the posterior distribution and is generally found using numerical optimization methods. This is often fast and easy to do, but only gives a point estimate for the parameters and can be biased if the mode isn’t representative of the distribution. PyMC3 provides this functionality with the find_MAP function.
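To make "mode of the posterior found by numerical optimization" concrete, here is a minimal sketch on a deliberately tiny model. It is an assumption chosen for illustration, not the regression model of this section: \(y_i \sim \mathcal{N}(m, 1)\) with prior \(m \sim \mathcal{N}(0, 10^2)\). It uses plain gradient ascent rather than the optimizers find_MAP relies on, and compares the result with the closed-form mode available for this conjugate case. The data values are made up.

```python
# Toy model (illustrative assumption): y_i ~ Normal(m, 1), m ~ Normal(0, 10)
y = [0.8, 1.3, 0.9, 1.6, 1.1]  # made-up observations
prior_sd = 10.0


def log_posterior_grad(m):
    """Gradient of the unnormalized log posterior with respect to m."""
    return sum(yi - m for yi in y) - m / prior_sd**2


# Plain gradient ascent on the log posterior
m = 0.0
step = 0.5 / len(y)
for _ in range(200):
    m += step * log_posterior_grad(m)

# Closed-form posterior mode for this conjugate model, for comparison
m_exact = sum(y) / (len(y) + 1 / prior_sd**2)

print("numerical MAP:", m)
print("closed-form MAP:", m_exact)
```

For realistic models no closed form exists, which is why a general-purpose optimizer is used instead.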

Below we find the MAP for our original model. The MAP is returned as a parameter point, which is always represented by a Python dictionary of variable names to NumPy arrays of parameter values.

map_estimate = pm.find_MAP(model=basic_model)

map_estimate

{'alpha': array(0.95724679),

'beta': array([1.10071814, 2.9511438 ]),

'sigma_log__': array(0.03540151),

'sigma': array(1.0360356)}

By default, find_MAP uses the Broyden–Fletcher–Goldfarb–Shanno (BFGS) optimization algorithm to find the maximum of the log-posterior but also allows selection of other optimization algorithms from the scipy.optimize module. For example, below we use Powell’s method to find the MAP.

map_estimate = pm.find_MAP(model=basic_model, method='powell')

map_estimate

/Users/furnstah/opt/anaconda3/envs/8820-book-env-2/lib/python3.9/site-packages/scipy/optimize/_minimize.py:522: RuntimeWarning: Method powell does not use gradient information (jac).

warn('Method %s does not use gradient information (jac).' % method,

{'alpha': array(0.95835519),

'beta': array([1.1017629 , 2.95394057]),

'sigma_log__': array(0.03638187),

'sigma': array(1.03705179)}

It is important to note that the MAP estimate is not always reasonable, especially if the mode is at an extreme. This can be a subtle issue; with high dimensional posteriors, one can have areas of extremely high density but low total probability because the volume is very small. This will often occur in hierarchical models with the variance parameter for the random effect. If the individual group means are all the same, the posterior will have near infinite density if the scale parameter for the group means is almost zero, even though the probability of such a small scale parameter will be small since the group means must be extremely close together.
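The gap between high density and high probability mass can be seen numerically even in a very simple setting. The sketch below is an illustrative assumption, not one of the hierarchical models mentioned above. It draws from a 100-dimensional standard normal, whose mode is the origin, and measures how far the draws fall from that mode.

```python
import math
import random

random.seed(0)
dim = 100         # dimensionality of a standard normal stand-in "posterior"
n_samples = 2000

# Distance of each draw from the mode (the origin)
radii = []
for _ in range(n_samples):
    draw = [random.gauss(0.0, 1.0) for _ in range(dim)]
    radii.append(math.sqrt(sum(x * x for x in draw)))

print("closest draw to the mode:", min(radii))
print("mean distance from the mode:", sum(radii) / n_samples)
print("sqrt(dim):", math.sqrt(dim))
```

The density is highest at the origin, yet the draws concentrate in a shell at a distance of roughly sqrt(dim) from it, because the volume near the mode is tiny. A point estimate at the mode therefore describes a region where almost no posterior mass lies.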

Most techniques for finding the MAP estimate also only find a local optimum (which is often good enough), but can fail badly for multimodal posteriors if the different modes are meaningfully different.

In summary, while PyMC3 provides the function find_MAP(), at this point mostly for historical reasons, this function is of little use in most scenarios. If you want a point estimate you should get it from the posterior. In the next section we will see how to get a posterior using sampling methods.

Sampling methods

Though finding the MAP is a fast and easy way of obtaining estimates of the unknown model parameters, it is limited because there is no associated estimate of uncertainty produced with the MAP estimates. Instead, a simulation-based approach such as Markov chain Monte Carlo (MCMC) can be used to obtain a Markov chain of values that, given the satisfaction of certain conditions, are indistinguishable from samples from the true posterior distribution.
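The idea of a Markov chain whose values behave like posterior samples can be sketched with a bare-bones random-walk Metropolis sampler. This is a simplified illustration rather than PyMC3's Metropolis step method. The target, a Normal(3, 1) density, the proposal width and the burn-in length are all assumptions chosen for the example.

```python
import math
import random


def log_target(x):
    """Unnormalized log density of a Normal(3, 1) target (illustrative choice)."""
    return -0.5 * (x - 3.0) ** 2


def metropolis(n_draws, start=0.0, proposal_sd=1.0, seed=1):
    """Random-walk Metropolis: propose a jump, accept with prob min(1, ratio)."""
    rng = random.Random(seed)
    x = start
    chain = []
    for _ in range(n_draws):
        proposal = x + rng.gauss(0.0, proposal_sd)
        log_ratio = log_target(proposal) - log_target(x)
        if rng.random() < math.exp(min(0.0, log_ratio)):
            x = proposal  # accept; otherwise keep the current value
        chain.append(x)
    return chain


chain = metropolis(20000)
kept = chain[2000:]  # discard early draws taken before the chain settles
mean = sum(kept) / len(kept)
var = sum((v - mean) ** 2 for v in kept) / len(kept)
print("sample mean:", mean, "sample variance:", var)
```

Unlike a point estimate, the retained draws carry uncertainty information: their spread approximates the spread of the target distribution.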

To conduct MCMC sampling to generate posterior samples in PyMC3, we specify a step method object that corresponds to a particular MCMC algorithm, such as Metropolis, Slice sampling, or the No-U-Turn Sampler (NUTS). PyMC3’s step_methods submodule contains the following samplers: NUTS, Metropolis, Slice, HamiltonianMC, and BinaryMetropolis. These step methods can be assigned manually, or assigned automatically by PyMC3. Auto-assignment is based on the attributes of each variable in the model. In general:

- Binary variables will be assigned to BinaryMetropolis

- Discrete variables will be assigned to Metropolis

- Continuous variables will be assigned to NUTS

Auto-assignment can be overridden for any subset of variables by specifying them manually prior to sampling.

Gradient-based sampling methods

PyMC3 has the standard sampling algorithms like adaptive Metropolis-Hastings and adaptive slice sampling, but PyMC3’s most capable step method is the No-U-Turn Sampler. NUTS is especially useful on models that have many continuous parameters, a situation where other MCMC algorithms work very slowly. It takes advantage of information about where regions of higher probability are, based on the gradient of the log posterior-density. This helps it achieve dramatically faster convergence on large problems than traditional sampling methods achieve. PyMC3 relies on Theano to analytically compute model gradients via automatic differentiation of the posterior density. NUTS also has several self-tuning strategies for adaptively setting the tunable parameters of Hamiltonian Monte Carlo. For random variables that are undifferentiable (namely, discrete variables) NUTS cannot be used, but it may still be used on the differentiable variables in a model that contains undifferentiable variables.
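To show how gradient information enters, here is a minimal sketch of plain Hamiltonian Monte Carlo on a one-dimensional standard normal target. It is not NUTS and not PyMC3's implementation: the step size and number of leapfrog steps are fixed by hand here, whereas NUTS sets such quantities adaptively. All names and settings are assumptions for illustration.

```python
import math
import random


def log_target(x):
    """Log density (up to a constant) of a standard normal target."""
    return -0.5 * x * x


def grad_log_target(x):
    """Gradient of log_target with respect to x."""
    return -x


def hmc_step(x, rng, step_size=0.2, n_leapfrog=20):
    """One HMC transition with fixed step size and trajectory length."""
    p = rng.gauss(0.0, 1.0)  # draw a fresh momentum
    x_new, p_new = x, p

    # Leapfrog integrator: half momentum step, alternating full steps, half step
    p_new += 0.5 * step_size * grad_log_target(x_new)
    for i in range(n_leapfrog):
        x_new += step_size * p_new
        if i < n_leapfrog - 1:
            p_new += step_size * grad_log_target(x_new)
    p_new += 0.5 * step_size * grad_log_target(x_new)

    # Accept or reject based on the change in total energy
    energy_old = -log_target(x) + 0.5 * p * p
    energy_new = -log_target(x_new) + 0.5 * p_new * p_new
    if rng.random() < math.exp(min(0.0, energy_old - energy_new)):
        return x_new
    return x


rng = random.Random(0)
x = 0.0
draws = []
for _ in range(5000):
    x = hmc_step(x, rng)
    draws.append(x)

mean = sum(draws) / len(draws)
var = sum((d - mean) ** 2 for d in draws) / len(draws)
print("sample mean:", mean, "sample variance:", var)
```

Each proposal follows the gradient along a whole trajectory instead of taking a single random jump, which is what lets this family of samplers move efficiently through models with many continuous parameters.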

NUTS requires a scaling matrix parameter, which is analogous to the variance parameter for the jump proposal distribution in Metropolis-Hastings, although NUTS uses it somewhat differently. The matrix gives the rough shape of the distribution so that NUTS does not make jumps that are too large in some directions and too small in other directions. It is important to set this scaling parameter to a reasonable value to facilitate efficient sampling. This is especially true for models that have many unobserved stochastic random variables or models with highly non-normal posterior distributions. Poor scaling parameters will slow down NUTS significantly, sometimes almost stopping it completely. A reasonable starting point for sampling can also be important for efficient sampling, but not as often.

PyMC3 automatically initializes NUTS to reasonable values based on the variance of the samples obtained during a tuning phase. A little bit of noise is added to ensure different, parallel, chains start from different points. Also, PyMC3 will automatically assign an appropriate sampler if we don’t supply it via the step keyword argument (see below for an example of how to explicitly assign step methods).

with basic_model:

    # draw 500 posterior samples

    trace = pm.sample(500, return_inferencedata=False)

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [sigma, beta, alpha]

Sampling 4 chains for 1_000 tune and 500 draw iterations (4_000 + 2_000 draws total) took 15 seconds.

The sample function runs the step method(s) assigned (or passed) to it for the given number of iterations and returns a Trace object containing the samples collected, in the order they were collected. The trace object can be queried in a similar way to a dict containing a map from variable names to numpy.arrays. The first dimension of the array is the sampling index and the later dimensions match the shape of the variable. We can see the last 5 values for the alpha variable as follows:

trace['alpha'][-5:]

array([1.05903394, 1.00385661, 0.80612652, 0.63172247, 0.7096896 ])

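If we wanted to use the slice sampling algorithm instead of NUTS (which was assigned automatically), we could have specified this as the step argument for sample. Because no variables are passed to pm.Slice(), it is applied to all three free variables, as the sampler output confirms.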

with basic_model:

    # instantiate sampler

    step = pm.Slice()

    # draw 5000 posterior samples

    trace = pm.sample(5000, step=step, return_inferencedata=False)

Multiprocess sampling (4 chains in 4 jobs)

CompoundStep

>Slice: [sigma]

>Slice: [beta]

>Slice: [alpha]

Sampling 4 chains for 1_000 tune and 5_000 draw iterations (4_000 + 20_000 draws total) took 23 seconds.

Posterior analysis

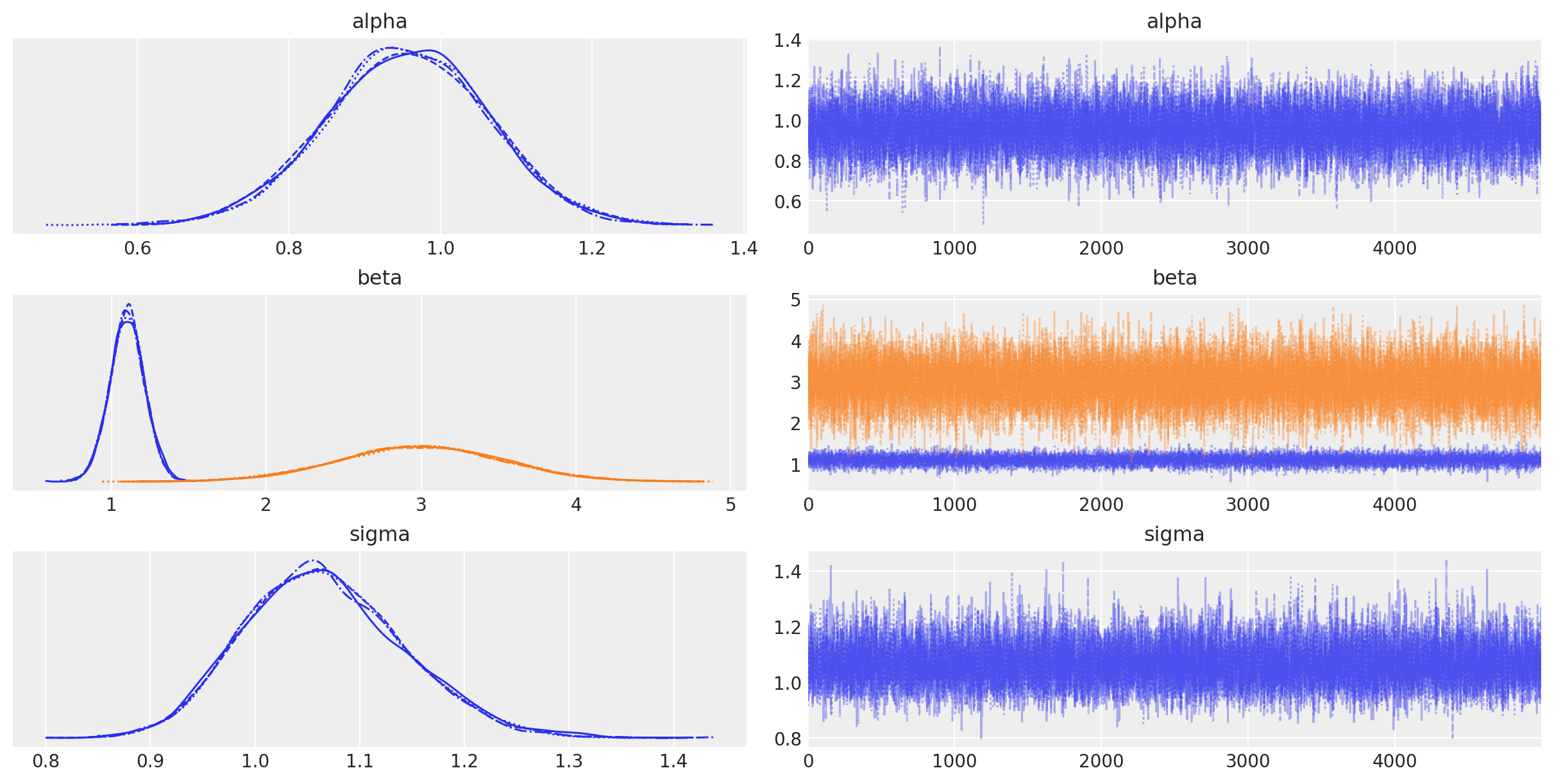

PyMC3’s plotting and diagnostics functionalities are now taken care of by a dedicated, platform-agnostic package named ArviZ. A simple posterior plot can be created using plot_trace.

with basic_model:

    az.plot_trace(trace);

The left column consists of a smoothed histogram (using kernel density estimation) of the marginal posteriors of each stochastic random variable while the right column contains the samples of the Markov chain plotted in sequential order. The beta variable, being vector-valued, produces two histograms and two sample traces, corresponding to both predictor coefficients.
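The "smoothed histogram" in the left column is a kernel density estimate. A minimal sketch of the idea follows, using a Gaussian kernel with a hand-picked bandwidth. ArviZ chooses its bandwidth and evaluation grid differently, so this only illustrates the principle. The sample values stand in for posterior draws and are generated here for the example.

```python
import math
import random


def gaussian_kde(samples, bandwidth):
    """Return a function estimating the density as an average of Gaussian bumps."""
    norm = 1.0 / (len(samples) * bandwidth * math.sqrt(2.0 * math.pi))

    def density(x):
        return norm * sum(math.exp(-0.5 * ((x - s) / bandwidth) ** 2) for s in samples)

    return density


rng = random.Random(42)
samples = [rng.gauss(1.0, 0.1) for _ in range(500)]  # stand-in for posterior draws
density = gaussian_kde(samples, bandwidth=0.03)      # bandwidth is an assumption

# Evaluate on a grid and check that the estimate integrates to about 1
grid = [0.5 + 0.005 * i for i in range(201)]         # covers 0.5 to 1.5
area = sum(density(x) for x in grid) * 0.005
print("approximate area under the curve:", area)
```

A smaller bandwidth follows the individual draws more closely and gives a bumpier curve; a larger one smooths more aggressively.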

In addition, the summary function provides a text-based output of common posterior statistics:

with basic_model:

    display(az.summary(trace, round_to=2))

| | mean | sd | hdi_3% | hdi_97% | mcse_mean | mcse_sd | ess_bulk | ess_tail | r_hat |
|---|---|---|---|---|---|---|---|---|---|
| alpha | 0.96 | 0.11 | 0.76 | 1.17 | 0.0 | 0.0 | 19896.34 | 15334.42 | 1.0 |
| beta[0] | 1.10 | 0.11 | 0.88 | 1.31 | 0.0 | 0.0 | 17794.89 | 13792.12 | 1.0 |
| beta[1] | 2.99 | 0.53 | 2.02 | 4.02 | 0.0 | 0.0 | 16631.91 | 13246.66 | 1.0 |
| sigma | 1.07 | 0.08 | 0.93 | 1.21 | 0.0 | 0.0 | 17844.15 | 14443.55 | 1.0 |
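The r_hat column compares variability between chains with variability within chains; values close to 1 suggest the chains agree. The sketch below implements the classic Gelman-Rubin form of this statistic on synthetic chains. ArviZ computes a more refined variant, so the numbers are not expected to match its output exactly. The function name `classic_rhat` and the synthetic chains are assumptions for illustration.

```python
import random


def classic_rhat(chains):
    """Classic Gelman-Rubin potential scale reduction for equal-length chains."""
    m = len(chains)
    n = len(chains[0])
    means = [sum(c) / n for c in chains]
    grand = sum(means) / m
    # Between-chain variance B and mean within-chain variance W
    b = n / (m - 1) * sum((mu - grand) ** 2 for mu in means)
    w = sum(sum((x - mu) ** 2 for x in c) / (n - 1) for c, mu in zip(chains, means)) / m
    var_hat = (n - 1) / n * w + b / n
    return (var_hat / w) ** 0.5


rng = random.Random(0)
# Four chains exploring the same distribution
mixed = [[rng.gauss(0, 1) for _ in range(1000)] for _ in range(4)]
# Four chains stuck in two different places
stuck = [[rng.gauss(shift, 1) for _ in range(1000)] for shift in (0, 0, 3, 3)]

print("well-mixed chains:", classic_rhat(mixed))
print("disagreeing chains:", classic_rhat(stuck))
```

When chains disagree, the between-chain term inflates the pooled variance estimate relative to the within-chain variance, pushing the ratio well above 1.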

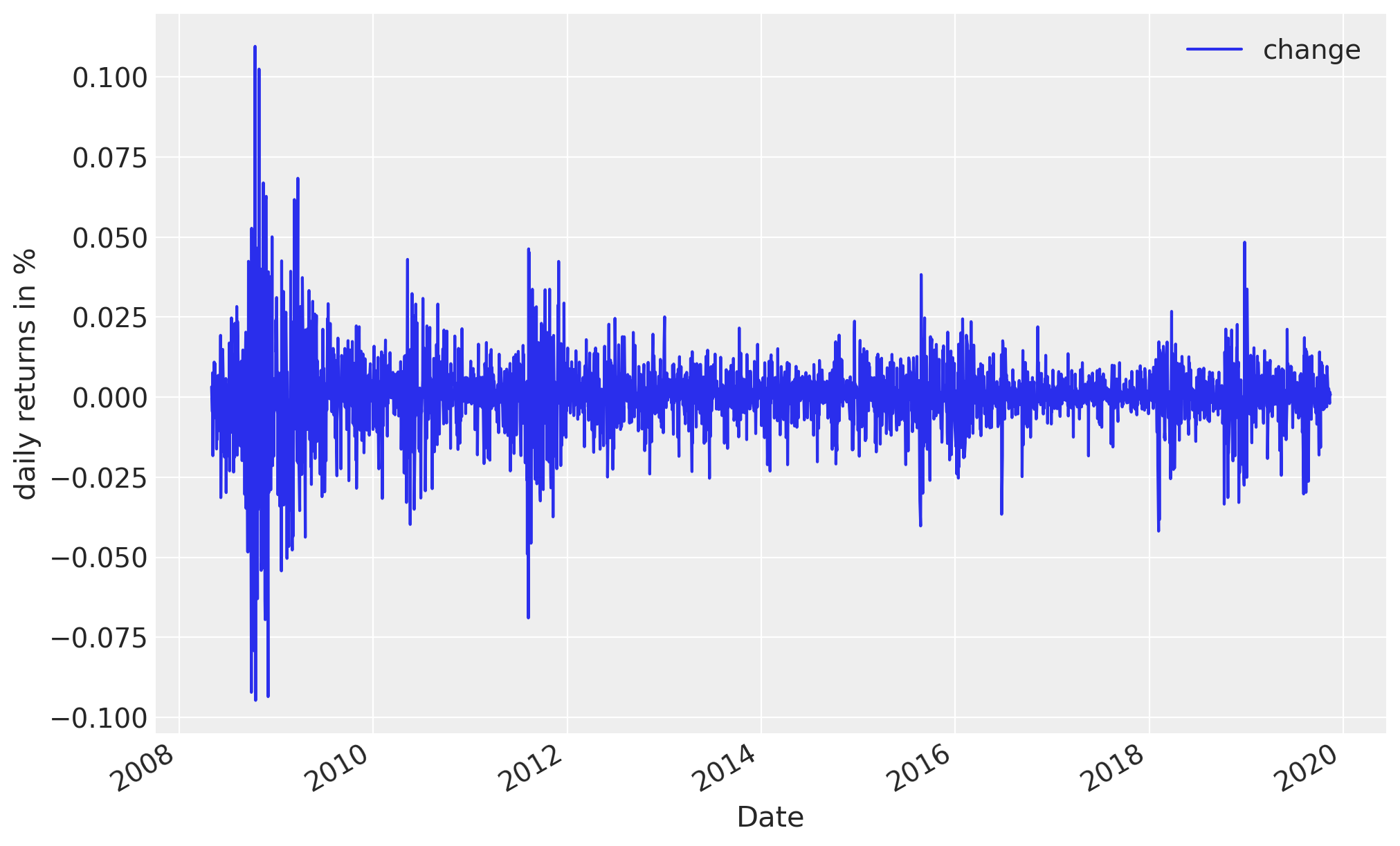

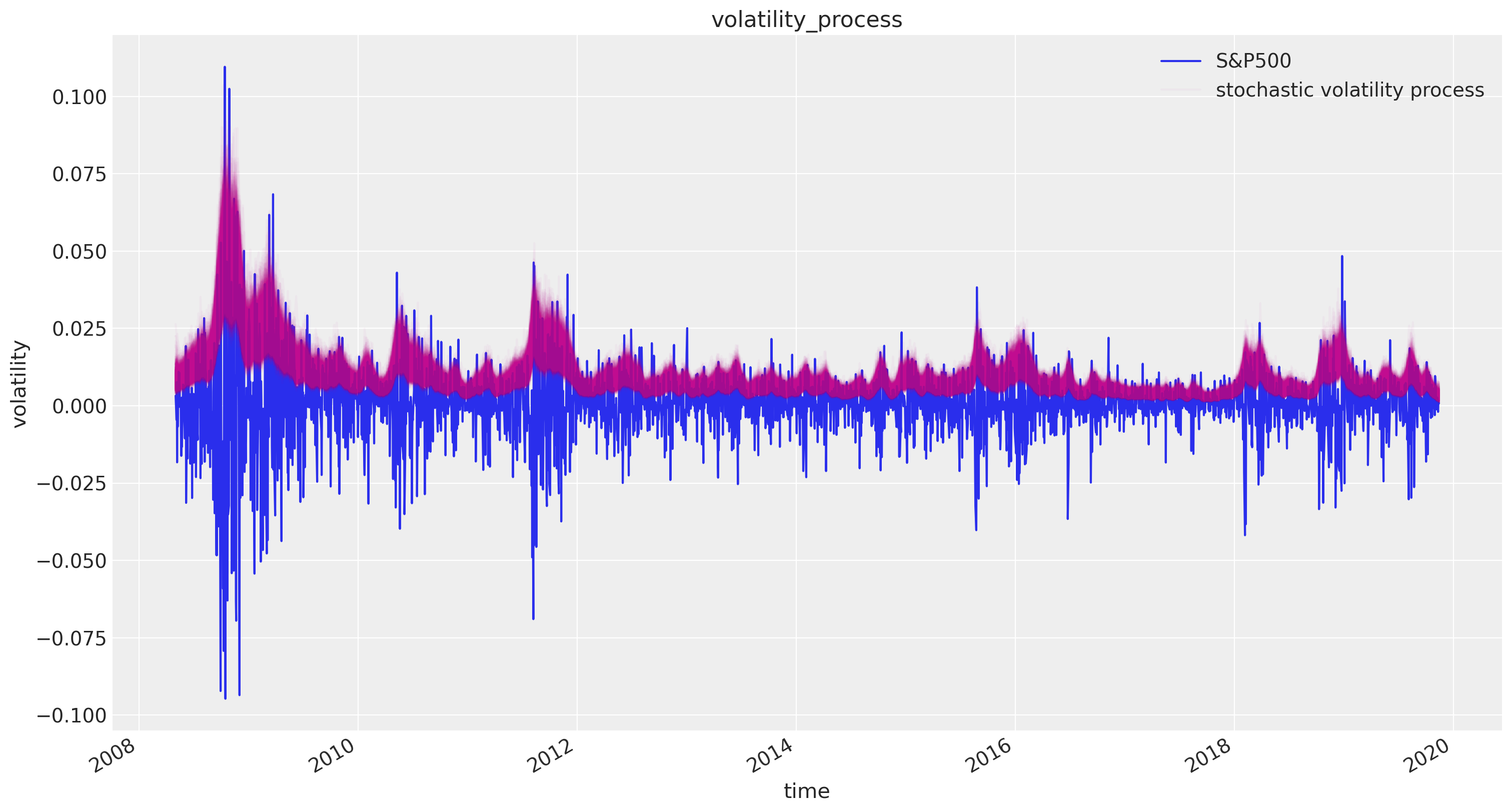

Case study 1: Stochastic volatility

We present a case study of stochastic volatility, time-varying stock market volatility, to illustrate PyMC3’s use in addressing a more realistic problem. The distribution of market returns is highly non-normal, which makes sampling the volatilities significantly more difficult. This example has 400+ parameters so using common sampling algorithms like Metropolis-Hastings would get bogged down, generating highly autocorrelated samples. Instead, we use NUTS, which is dramatically more efficient.

The Model

Asset prices have time-varying volatility (variance of day over day returns). In some periods, returns are highly variable, while in others they are very stable. Stochastic volatility models address this with a latent volatility variable, which changes over time. The following model is similar to the one described in the NUTS paper (Hoffman 2014, p. 21).

Here, \(r\) is the daily return series which is modeled with a Student-t distribution with an unknown degrees of freedom parameter, and a scale parameter determined by a latent process \(s\). The individual \(s_i\) are the individual daily log volatilities in the latent log volatility process.

The Data

Our data consist of daily returns of the S&P 500 stock market index since the 2008 financial crisis:

import pandas as pd

returns = pd.read_csv(

    pm.get_data("SP500.csv"), parse_dates=True, index_col=0, usecols=["Date", "change"]

)

len(returns)

2906

import warnings

with warnings.catch_warnings():

    warnings.filterwarnings("ignore", category=UserWarning)

    returns.plot(figsize=(10, 6))

    plt.ylabel("daily returns in %");

Model Specification

As with the linear regression example, specifying the model in PyMC3 mirrors its statistical specification. This model employs several new distributions: the Exponential distribution for the \(\nu\) and \(\sigma\) priors, the Student-T (StudentT) distribution for the distribution of returns, and the GaussianRandomWalk for the prior for the latent volatilities.

In PyMC3, variables with purely positive priors like Exponential are transformed with a log transform. This makes sampling more robust. Behind the scenes, a variable in the unconstrained space (named “variableName_log__”) is added to the model for sampling. In this model this happens behind the scenes for both the degrees of freedom, nu, and the scale parameter for the volatility process, sigma, since they both have exponential priors. Variables with priors that constrain them on two sides, like Beta or Uniform, are also transformed to be unconstrained but with a log odds transform.
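The effect of these transforms can be sketched in a few lines of plain Python. The functions below are illustrative and are not PyMC3's transform classes. They show the log transform for a positive variable, including the Jacobian term needed to keep the density correct, and the log-odds (logit) transform for a variable on (0, 1). As a check, the MAP output shown earlier reports sigma in both spaces (sigma_log__ and sigma), and exponentiating the former reproduces the latter.

```python
import math


def exponential_logpdf(x, rate):
    """Log density of an Exponential(rate) distribution for x > 0."""
    return math.log(rate) - rate * x


def logp_unconstrained(z, rate):
    """Log density of z = log(x) when x ~ Exponential(rate).

    The extra `+ z` term is the log Jacobian of the map x = exp(z).
    """
    x = math.exp(z)
    return exponential_logpdf(x, rate) + z


def logit(p):
    """Log odds: maps (0, 1) to the whole real line."""
    return math.log(p / (1.0 - p))


def inv_logit(z):
    """Inverse of logit: maps any real number back into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


# Any real z maps back to a positive value, so a sampler can move freely in z
for z in (-5.0, 0.0, 2.0):
    print(z, math.exp(z), logp_unconstrained(z, rate=2.0))

# Back-transform the sigma_log__ value from the earlier MAP output
print(math.exp(0.03540151))
```

Sampling in the unconstrained space means proposals can never land on an illegal value such as a negative scale.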

Although, unlike model specification in PyMC2, we do not typically provide starting points for variables at the model specification stage, we can also provide an initial value for any distribution (called a “test value”) using the testval argument. This overrides the default test value for the distribution (usually the mean, median or mode of the distribution), and is most often useful if some values are illegal and we want to ensure we select a legal one. The test values for the distributions are also used as a starting point for sampling and optimization by default, though this is easily overridden.

The vector of latent volatilities s is given a prior distribution by GaussianRandomWalk. As its name suggests, GaussianRandomWalk is a vector-valued distribution where the values of the vector form a random normal walk of length n, as specified by the shape argument. The scale of the innovations of the random walk, sigma, is specified in terms of the standard deviation of the normally distributed innovations and can be a scalar or vector.
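What such a prior looks like as a generative process can be sketched without PyMC3: each value is the previous value plus a normally distributed innovation. The helper `gaussian_random_walk` below is a hypothetical illustration, and it starts the walk at zero, which is an assumption of this sketch rather than a statement about how PyMC3 initializes the walk. The innovation scale 0.1 is also chosen only for the example.

```python
import itertools
import random


def gaussian_random_walk(n, sigma, seed=0):
    """Draw a length-n walk whose steps (innovations) are Normal(0, sigma)."""
    rng = random.Random(seed)
    innovations = [rng.gauss(0.0, sigma) for _ in range(n)]
    # Each value is the previous value plus a fresh innovation
    return list(itertools.accumulate(innovations))


walk = gaussian_random_walk(n=2906, sigma=0.1)

# Recover the innovations as successive differences and check their scale
steps = [b - a for a, b in zip(walk, walk[1:])]
step_sd = (sum(s * s for s in steps) / len(steps)) ** 0.5
print(len(walk), step_sd)
```

A small innovation scale ties neighbouring values closely together, giving a smooth path; a large one lets the latent process change quickly from one day to the next.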

We’ll also wrap our returns in PyMC3’s Data container [https://docs.pymc.io/notebooks/data_container.html]. That way, when building our model, we can specify the dimension names instead of specifying the shapes of those random variables as numbers. And we will let the model infer the coordinates of those random variables. This will make more sense when you look at the model, but we encourage you to take a look at the ArviZ quickstart. It defines dimensions and coordinates more clearly and explains their big benefits.

Let’s get started on our model now:

with pm.Model() as sp500_model:

    # The model remembers the datetime index with the name 'date'

    change_returns = pm.Data("returns", returns["change"], dims="date", export_index_as_coords=True)

    nu = pm.Exponential("nu", 1 / 10.0, testval=5.0)

    sigma = pm.Exponential("sigma", 2.0, testval=0.1)

    # We can now figure out the shape of variables based on the

    # index of the dataset

    s = pm.GaussianRandomWalk("s", sigma=sigma, dims="date")

    # instead of:

    # s = pm.GaussianRandomWalk('s', sigma, shape=len(returns))

    volatility_process = pm.Deterministic(

        "volatility_process", pm.math.exp(-2 * s) ** 0.5, dims="date"

    )

    r = pm.StudentT("r", nu=nu, sigma=volatility_process, observed=change_returns, dims="date")

And we see that the model did remember the dims and coords we gave it:

sp500_model.RV_dims

{'returns': ('date',),

's': ('date',),

'volatility_process': ('date',),

'r': ('date',)}

sp500_model.coords

{'date': DatetimeIndex(['2008-05-02', '2008-05-05', '2008-05-06', '2008-05-07',

'2008-05-08', '2008-05-09', '2008-05-12', '2008-05-13',

'2008-05-14', '2008-05-15',

...

'2019-11-01', '2019-11-04', '2019-11-05', '2019-11-06',

'2019-11-07', '2019-11-08', '2019-11-11', '2019-11-12',

'2019-11-13', '2019-11-14'],

dtype='datetime64[ns]', name='Date', length=2906, freq=None)}

Notice that we transform the log volatility process s into the volatility process by exp(-2*s) ** 0.5, which is equal to exp(-s). Here, exp is a Theano function (accessed through pm.math), rather than the corresponding function in NumPy; Theano provides a large subset of the mathematical functions that NumPy does.

Also note that we have declared the Model name sp500_model in the first occurrence of the context manager, rather than splitting it into two lines, as we did for the first example.

Fitting

with sp500_model:

    trace = pm.sample(2000, init="adapt_diag", return_inferencedata=False)

Auto-assigning NUTS sampler...

Initializing NUTS using adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [s, sigma, nu]

Sampling 4 chains for 1_000 tune and 2_000 draw iterations (4_000 + 8_000 draws total) took 441 seconds.

0, dim: date, 2906 =? 2906

The rhat statistic is larger than 1.05 for some parameters. This indicates slight problems during sampling.

The estimated number of effective samples is smaller than 200 for some parameters.

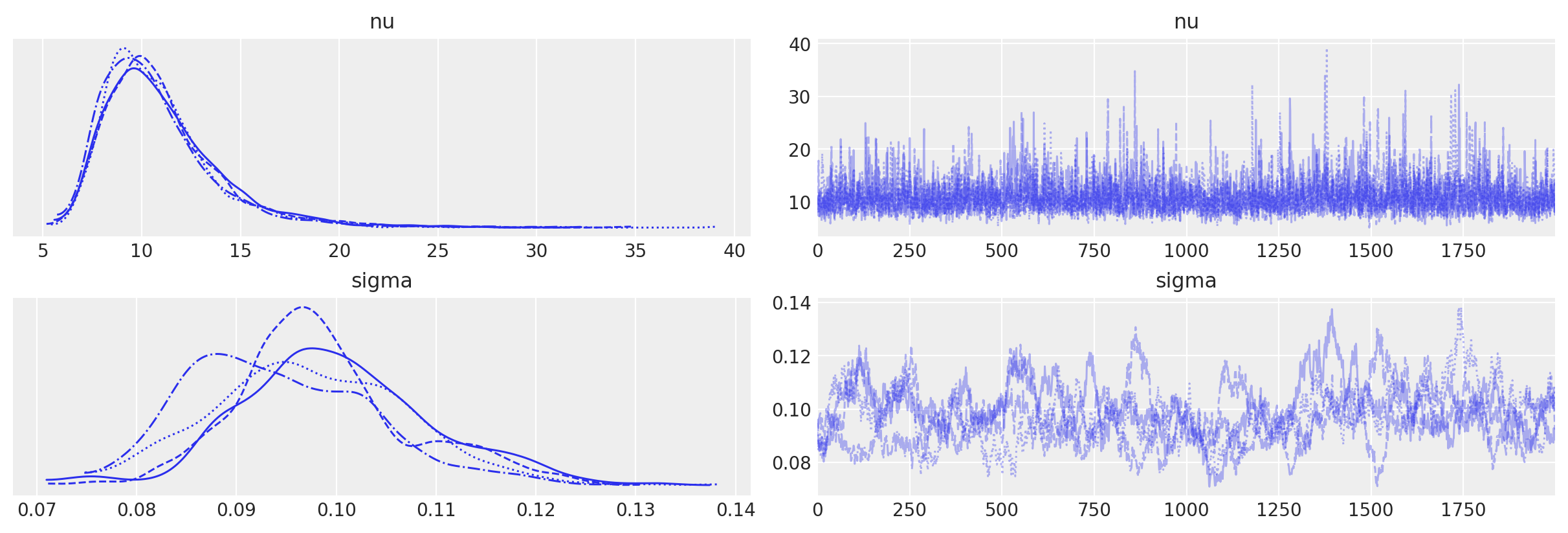

We can check our samples by looking at the traceplot for nu and sigma.

with sp500_model:

    az.plot_trace(trace, var_names=["nu", "sigma"]);

0, dim: date, 2906 =? 2906

0, dim: date, 2906 =? 2906

Finally we plot the distribution of volatility paths by plotting many of our sampled volatility paths on the same graph. Each is rendered partially transparent (via the alpha argument in Matplotlib’s plot function) so the regions where many paths overlap are shaded more darkly.

fig, ax = plt.subplots(figsize=(15, 8))

returns.plot(ax=ax)

ax.plot(returns.index, 1 / np.exp(trace["s", ::5].T), "C3", alpha=0.03)

ax.set(title="volatility_process", xlabel="time", ylabel="volatility")

ax.legend(["S&P500", "stochastic volatility process"], loc="upper right");

As you can see, the model correctly infers the increase in volatility during the 2008 financial crash. Moreover, note that this model is quite complex because of its high dimensionality and dependency-structure in the random walk distribution. NUTS as implemented in PyMC3, however, correctly infers the posterior distribution with ease.

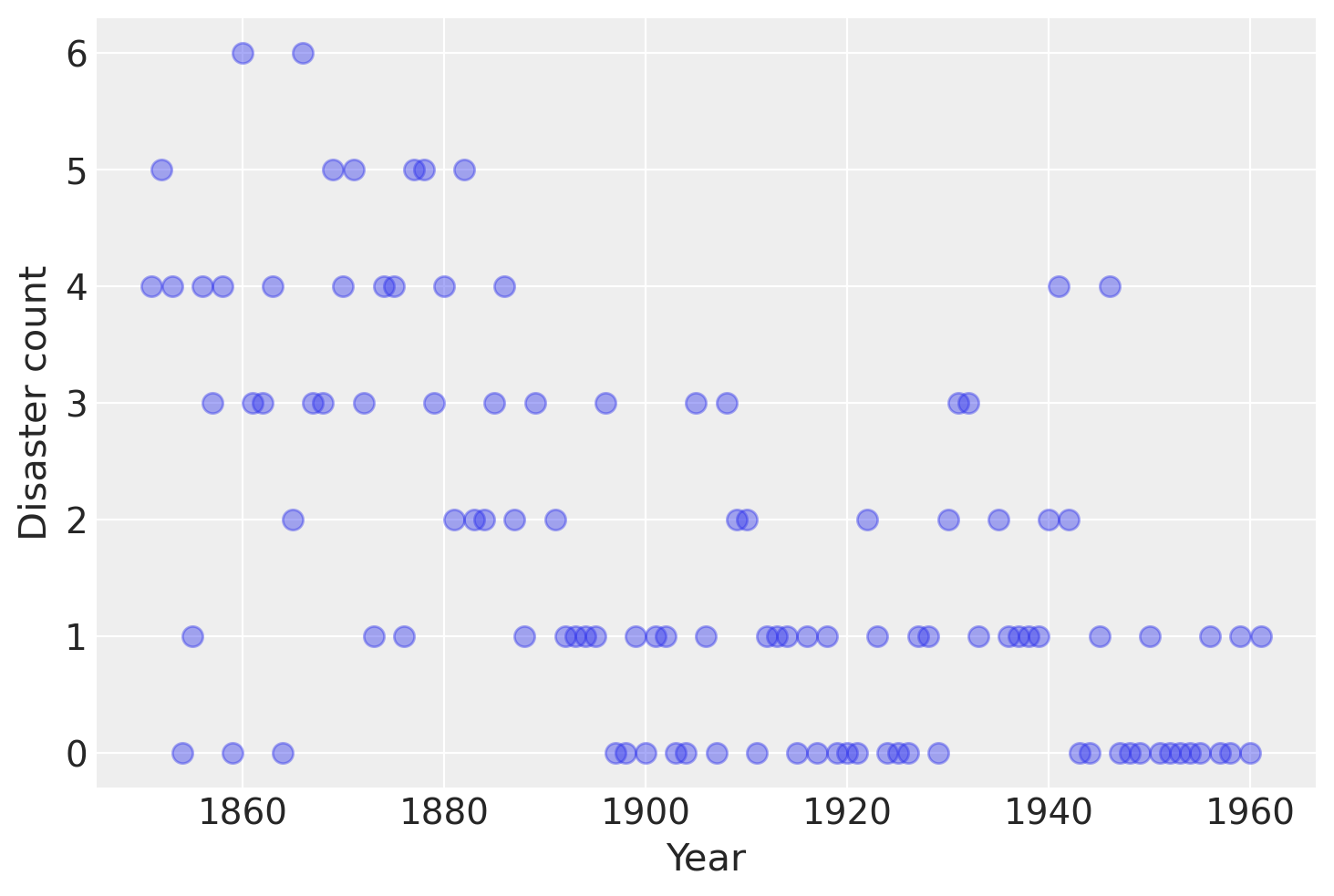

Case study 2: Coal mining disasters

Consider the following time series of recorded coal mining disasters in the UK from 1851 to 1962 (Jarrett, 1979). The number of disasters is thought to have been affected by changes in safety regulations during this period. Unfortunately, we also have a pair of years with missing data, identified as missing by a nan in the pandas Series. These missing values will be automatically imputed by PyMC3.

Next we will build a model for this series and attempt to estimate when the change occurred. At the same time, we will see how to handle missing data, use multiple samplers and sample from discrete random variables.

import pandas as pd

# fmt: off

disaster_data = pd.Series(

[4, 5, 4, 0, 1, 4, 3, 4, 0, 6, 3, 3, 4, 0, 2, 6,

3, 3, 5, 4, 5, 3, 1, 4, 4, 1, 5, 5, 3, 4, 2, 5,

2, 2, 3, 4, 2, 1, 3, np.nan, 2, 1, 1, 1, 1, 3, 0, 0,

1, 0, 1, 1, 0, 0, 3, 1, 0, 3, 2, 2, 0, 1, 1, 1,

0, 1, 0, 1, 0, 0, 0, 2, 1, 0, 0, 0, 1, 1, 0, 2,

3, 3, 1, np.nan, 2, 1, 1, 1, 1, 2, 4, 2, 0, 0, 1, 4,

0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0, 1]

)

# fmt: on

years = np.arange(1851, 1962)

plt.plot(years, disaster_data, "o", markersize=8, alpha=0.4)

plt.ylabel("Disaster count")

plt.xlabel("Year");

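Occurrences of disasters in the time series are thought to follow a Poisson process with a large rate parameter in the early part of the time series, and one with a smaller rate in the later part. We are interested in locating the change point in the series, which perhaps is related to changes in mining safety regulations.

In our model,

\[
\begin{aligned}
D_t &\sim \text{Pois}(r_t), \quad r_t = \begin{cases} e, & \text{if } t \le s \\ l, & \text{if } t > s \end{cases} \\
s &\sim \text{Unif}(t_l, t_h) \\
e &\sim \text{exp}(1) \\
l &\sim \text{exp}(1)
\end{aligned}
\]

the parameters are defined as follows: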

\(D_t\): The number of disasters in year \(t\)

\(r_t\): The rate parameter of the Poisson distribution of disasters in year \(t\).

\(s\): The year in which the rate parameter changes (the switchpoint).

\(e\): The rate parameter before the switchpoint \(s\).

\(l\): The rate parameter after the switchpoint \(s\).

\(t_l\), \(t_h\): The lower and upper boundaries of year \(t\).

This model is built much like our previous models. The major differences are the introduction of discrete variables with the Poisson and discrete-uniform priors and the novel form of the deterministic random variable rate.

with pm.Model() as disaster_model:

    switchpoint = pm.DiscreteUniform(

        "switchpoint", lower=years.min(), upper=years.max(), testval=1900

    )

    # Priors for pre- and post-switch rates number of disasters

    early_rate = pm.Exponential("early_rate", 1.0)

    late_rate = pm.Exponential("late_rate", 1.0)

    # Allocate appropriate Poisson rates to years before and after current

    rate = pm.math.switch(switchpoint >= years, early_rate, late_rate)

    disasters = pm.Poisson("disasters", rate, observed=disaster_data)

/Users/furnstah/opt/anaconda3/envs/8820-book-env-2/lib/python3.9/site-packages/pymc3/model.py:1755: ImputationWarning: Data in disasters contains missing values and will be automatically imputed from the sampling distribution.

warnings.warn(impute_message, ImputationWarning)

The logic for the rate random variable,

rate = pm.math.switch(switchpoint >= years, early_rate, late_rate)

is implemented using switch, a Theano function that works like an if statement. It uses the first argument to switch between the next two arguments.
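The elementwise behaviour of switch, and the way a candidate switchpoint changes the likelihood, can be sketched in plain Python on the same disaster counts. This is a rough plug-in calculation rather than the Bayesian model above: for each candidate year it sets the early and late rates to the observed segment means, skips the two missing years, and evaluates the Poisson log-likelihood. The helper names and the restricted candidate range (1860 to 1950, so that both segments contain data) are assumptions made for this example.

```python
import math

nan = float("nan")
disaster_counts = [
    4, 5, 4, 0, 1, 4, 3, 4, 0, 6, 3, 3, 4, 0, 2, 6,
    3, 3, 5, 4, 5, 3, 1, 4, 4, 1, 5, 5, 3, 4, 2, 5,
    2, 2, 3, 4, 2, 1, 3, nan, 2, 1, 1, 1, 1, 3, 0, 0,
    1, 0, 1, 1, 0, 0, 3, 1, 0, 3, 2, 2, 0, 1, 1, 1,
    0, 1, 0, 1, 0, 0, 0, 2, 1, 0, 0, 0, 1, 1, 0, 2,
    3, 3, 1, nan, 2, 1, 1, 1, 1, 2, 4, 2, 0, 0, 1, 4,
    0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0, 1,
]
years = list(range(1851, 1962))


def rates_for(switchpoint, early_rate, late_rate):
    """Elementwise choice: early rate up to and including the switchpoint."""
    return [early_rate if switchpoint >= year else late_rate for year in years]


def poisson_loglik(rates):
    """Poisson log-likelihood of the observed counts, skipping missing years."""
    total = 0.0
    for d, r in zip(disaster_counts, rates):
        if math.isnan(d):
            continue
        total += d * math.log(r) - r - math.lgamma(d + 1)
    return total


def segment_mean(values):
    """Mean of the non-missing values in a segment."""
    observed = [v for v in values if not math.isnan(v)]
    return sum(observed) / len(observed)


best = None
for s in range(1860, 1951):
    k = s - 1851 + 1  # number of years up to and including s
    early = segment_mean(disaster_counts[:k])
    late = segment_mean(disaster_counts[k:])
    ll = poisson_loglik(rates_for(s, early, late))
    if best is None or ll > best[1]:
        best = (s, ll)

print("switchpoint with highest plug-in log-likelihood:", best[0])
```

Moving the candidate switchpoint by one year reassigns exactly one observation from one rate to the other, so the likelihood changes in discrete jumps rather than smoothly.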

Missing values are handled transparently by passing a MaskedArray or a pandas.DataFrame with NaN values to the observed argument when creating an observed stochastic random variable. Behind the scenes, another random variable, disasters_missing, is created to model the missing values.

Unfortunately, because they are discrete variables and thus have no meaningful gradient, we cannot use NUTS for sampling switchpoint or the missing disaster observations. Instead, we will sample using a Metropolis step method, which implements adaptive Metropolis-Hastings, because it is designed to handle discrete values. PyMC3 automatically assigns the correct sampling algorithms.

with disaster_model:

    trace = pm.sample(10000, return_inferencedata=False)

Multiprocess sampling (4 chains in 4 jobs)

CompoundStep

>CompoundStep

>>Metropolis: [disasters_missing]

>>Metropolis: [switchpoint]

>NUTS: [late_rate, early_rate]

Sampling 4 chains for 1_000 tune and 10_000 draw iterations (4_000 + 40_000 draws total) took 26 seconds.

The number of effective samples is smaller than 10% for some parameters.

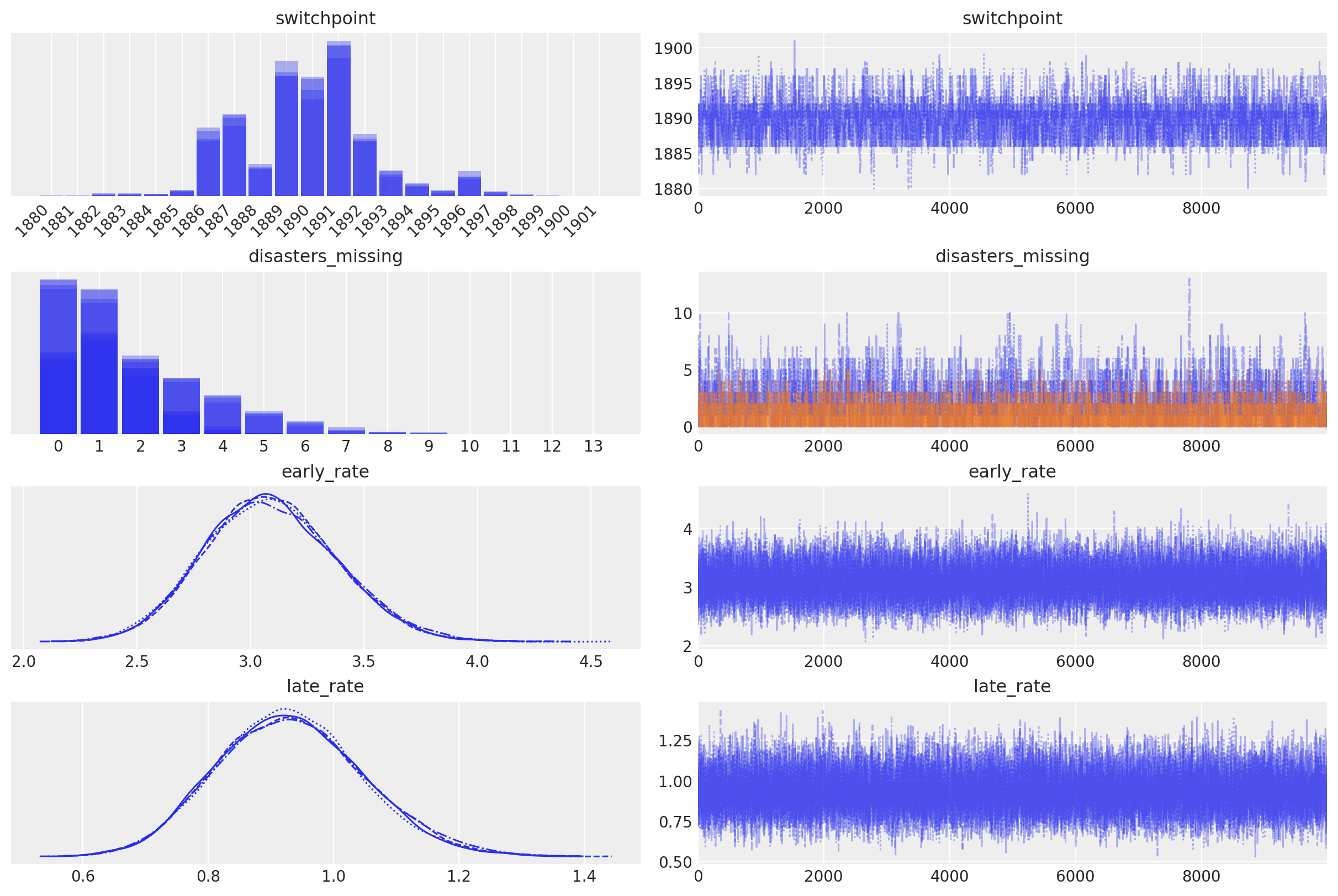

In the trace plot below we can see that there’s about a 10 year span that’s plausible for a significant change in safety, but a 5 year span that contains most of the probability mass. The distribution is jagged because of the jumpy relationship between the year switchpoint and the likelihood and not due to sampling error.

with disaster_model:

    idata = az.from_pymc3(trace)

idata

<xarray.Dataset>

Dimensions: (chain: 4, draw: 10000, disasters_missing_dim_0: 2)

Coordinates:

  * chain (chain) int64 0 1 2 3

  * draw (draw) int64 0 1 2 3 4 ... 9995 9996 9997 9998 9999

  * disasters_missing_dim_0 (disasters_missing_dim_0) int64 0 1

Data variables:

    switchpoint (chain, draw) int64 1889 1889 1889 ... 1889 1889

    disasters_missing (chain, draw, disasters_missing_dim_0) int64 0 ....

    early_rate (chain, draw) float64 2.941 3.386 ... 2.981 3.146

    late_rate (chain, draw) float64 1.012 0.8246 ... 0.9413

Attributes:

    created_at: 2021-10-25T16:58:57.210704

    arviz_version: 0.11.2

    inference_library: pymc3

    inference_library_version: 3.11.2

    sampling_time: 26.108917951583862

    tuning_steps: 1000

- chain: 4

- draw: 10000

- disasters_dim_0: 111

- chain(chain)int640 1 2 3

array([0, 1, 2, 3])

- draw(draw)int640 1 2 3 4 ... 9996 9997 9998 9999

array([ 0, 1, 2, ..., 9997, 9998, 9999])

- disasters_dim_0(disasters_dim_0)int640 1 2 3 4 5 ... 106 107 108 109 110

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110])

- disasters(chain, draw, disasters_dim_0)float64-1.804 -2.335 ... -0.9413 -1.002

array([[[-1.80416401, -2.334955 , -1.80416401, ..., -1.00007036, -1.01190933, -1.00007036], [-1.68543016, -2.07517555, -1.68543016, ..., -1.01746461, -0.82456358, -1.01746461], [-1.77181535, -2.27063454, -1.77181535, ..., -1.00002154, -0.99345112, -1.00002154], ..., [-1.7835021 , -2.29422514, -1.7835021 , ..., -1.02613008, -0.78847314, -1.02613008], [-1.70645819, -2.1277442 , -1.70645819, ..., -1.00212772, -1.06665983, -1.00212772], [-1.81723504, -2.36016171, -1.81723504, ..., -1.00018537, -1.01937823, -1.00018537]], [[-1.79396298, -2.31499129, -1.79396298, ..., -1.00478042, -1.10099215, -1.00478042], [-1.89957015, -2.51152492, -1.89957015, ..., -1.00536038, -1.10714511, -1.00536038], [-1.8048501 , -2.3362882 , -1.8048501 , ..., -1.00084501, -0.95945159, -1.00084501], ... [-1.78122408, -2.28966053, -1.78122408, ..., -1.00007959, -1.01266998, -1.00007959], [-1.95356081, -2.6057364 , -1.95356081, ..., -1.01708106, -0.82637763, -1.01708106], [-1.76995615, -2.26684035, -1.76995615, ..., -1.00019722, -1.01999206, -1.00019722]], [[-1.93195577, -2.56841714, -1.93195577, ..., -1.00037112, -0.97300267, -1.00037112], [-1.93195577, -2.56841714, -1.93195577, ..., -1.00037112, -0.97300267, -1.00037112], [-1.91106416, -2.53185707, -1.91106416, ..., -1.00122268, -0.95136109, -1.00122268], ..., [-1.70370618, -2.1211305 , -1.70370618, ..., -1.00630061, -1.11649429, -1.00630061], [-1.79014236, -2.30744291, -1.79014236, ..., -1.00376835, -0.91567966, -1.00376835], [-1.73945262, -2.20267208, -1.73945262, ..., -1.00179049, -0.9413464 , -1.00179049]]])

- created_at :

- 2021-10-25T16:59:00.633518

- arviz_version :

- 0.11.2

- inference_library :

- pymc3

- inference_library_version :

- 3.11.2

<xarray.Dataset> Dimensions: (chain: 4, draw: 10000, disasters_dim_0: 111) Coordinates: * chain (chain) int64 0 1 2 3 * draw (draw) int64 0 1 2 3 4 5 ... 9994 9995 9996 9997 9998 9999 * disasters_dim_0 (disasters_dim_0) int64 0 1 2 3 4 5 ... 106 107 108 109 110 Data variables: disasters (chain, draw, disasters_dim_0) float64 -1.804 ... -1.002 Attributes: created_at: 2021-10-25T16:59:00.633518 arviz_version: 0.11.2 inference_library: pymc3 inference_library_version: 3.11.2xarray.Dataset -

- chain: 4

- draw: 10000

- accept_dim_0: 2

- accepted_dim_0: 2

- scaling_dim_0: 2

- chain(chain)int640 1 2 3

array([0, 1, 2, 3])

- draw(draw)int640 1 2 3 4 ... 9996 9997 9998 9999

array([ 0, 1, 2, ..., 9997, 9998, 9999])

- accept_dim_0(accept_dim_0)int640 1

array([0, 1])

- accepted_dim_0(accepted_dim_0)int640 1

array([0, 1])

- scaling_dim_0(scaling_dim_0)int640 1

array([0, 1])

- accept(chain, draw, accept_dim_0)float640.0 0.09958 0.0 ... 2.91 0.2096 1.0

array([[[0.00000000e+00, 9.95805028e-02], [0.00000000e+00, 7.53251093e-02], [0.00000000e+00, 1.00461720e-01], ..., [1.66039811e+00, 9.77077074e-02], [1.26814391e+00, 6.30710080e-01], [5.68881594e-01, 3.47203257e-01]], [[4.47924594e-01, 1.38266791e-02], [0.00000000e+00, 1.00000000e+00], [5.00000000e-01, 1.50833523e-03], ..., [3.74111419e-01, 8.50131131e-03], [2.64121270e-01, 4.98839079e-01], [0.00000000e+00, 1.00000000e+00]], [[1.17500396e-02, 7.34571538e-02], [1.00000000e+00, 1.00000000e+00], [2.69455542e-01, 1.00000000e+00], ..., [1.00000000e+00, 1.00000000e+00], [1.00000000e+00, 1.36080849e-01], [0.00000000e+00, 1.67825216e+00]], [[1.10078011e+00, 3.27216794e-03], [0.00000000e+00, 5.10451096e-01], [8.94560532e-01, 1.41139122e+00], ..., [2.71156378e+00, 6.07208651e-01], [0.00000000e+00, 2.91027423e+00], [2.09617310e-01, 1.00000000e+00]]]) - diverging(chain, draw)boolFalse False False ... False False

array([[False, False, False, ..., False, False, False], [False, False, False, ..., False, False, False], [False, False, False, ..., False, False, False], [False, False, False, ..., False, False, False]]) - max_energy_error(chain, draw)float640.2895 0.2215 ... 1.655 0.06026

array([[ 0.28951445, 0.22151411, -0.21684037, ..., 0.3873115 , -0.30784524, 0.61351681], [ 0.56502066, 0.37225637, -0.63884475, ..., -0.58798532, -0.74379831, 2.7098768 ], [ 1.22297677, 0.40935673, -1.33967548, ..., -0.42577553, 0.85738842, 1.23979203], [ 0.66856396, 0.13130675, -0.08778635, ..., 0.34408414, 1.65511602, 0.06026039]]) - step_size_bar(chain, draw)float641.148 1.148 1.148 ... 1.151 1.151

array([[1.14796117, 1.14796117, 1.14796117, ..., 1.14796117, 1.14796117, 1.14796117], [1.09175002, 1.09175002, 1.09175002, ..., 1.09175002, 1.09175002, 1.09175002], [1.22266463, 1.22266463, 1.22266463, ..., 1.22266463, 1.22266463, 1.22266463], [1.1510901 , 1.1510901 , 1.1510901 , ..., 1.1510901 , 1.1510901 , 1.1510901 ]]) - tree_depth(chain, draw)int642 2 2 1 2 2 2 2 ... 2 1 2 2 2 2 2 2

array([[2, 2, 2, ..., 2, 2, 2], [2, 2, 1, ..., 2, 2, 2], [2, 1, 2, ..., 2, 2, 2], [2, 1, 1, ..., 2, 2, 2]]) - energy_error(chain, draw)float640.05689 0.09843 ... -0.02892

array([[ 0.0568922 , 0.09843318, -0.11708068, ..., 0.07989321, 0.1372081 , -0.18010912], [ 0.2405393 , 0.37225637, -0.63884475, ..., 0.15033145, -0.46891978, -0.33428635], [ 0.82355895, 0.40935673, -1.27690995, ..., -0.40856292, 0.73540757, -0.78171085], [ 0.41019542, 0. , -0.08778635, ..., -0.00851987, -0.16415884, -0.02892175]]) - process_time_diff(chain, draw)float640.000644 0.000582 ... 0.000533

array([[0.000644, 0.000582, 0.000762, ..., 0.000575, 0.000574, 0.00057 ], [0.000563, 0.000644, 0.000447, ..., 0.000538, 0.000555, 0.000539], [0.000668, 0.0003 , 0.000681, ..., 0.000975, 0.001067, 0.001032], [0.000797, 0.00043 , 0.000309, ..., 0.000529, 0.000529, 0.000533]]) - energy(chain, draw)float64176.4 176.6 176.0 ... 179.6 175.6

array([[176.38560843, 176.55948151, 176.03799899, ..., 177.5938614 , 176.93074978, 177.79185632], [177.94394602, 178.38031621, 178.1223869 , ..., 178.5723439 , 177.87557349, 180.92084515], [184.29753533, 185.47860281, 183.21087654, ..., 177.08393789, 180.1461978 , 181.33819069], [178.65163542, 179.43904661, 178.78216911, ..., 179.20354578, 179.61192775, 175.58066949]]) - n_steps(chain, draw)float643.0 3.0 3.0 1.0 ... 3.0 3.0 3.0 3.0

array([[3., 3., 3., ..., 3., 3., 3.], [3., 3., 1., ..., 3., 3., 3.], [3., 1., 3., ..., 3., 3., 3.], [3., 1., 1., ..., 3., 3., 3.]]) - perf_counter_start(chain, draw)float64541.4 541.4 541.4 ... 554.7 554.7

array([[541.41679606, 541.41792837, 541.41900368, ..., 553.16267788, 553.16375172, 553.16481862], [541.36001728, 541.36127756, 541.3624694 , ..., 553.20322195, 553.20425321, 553.2052746 ], [541.38808315, 541.38925325, 541.39002982, ..., 553.10401508, 553.10585831, 553.10762119], [542.88495318, 542.88644516, 542.88739759, ..., 554.6707312 , 554.67171086, 554.67269701]]) - accepted(chain, draw, accepted_dim_0)boolFalse False False ... False True

array([[[False, False], [False, False], [False, False], ..., [ True, False], [ True, True], [False, False]], [[False, False], [False, True], [ True, False], ..., [ True, False], [False, False], [False, True]], [[False, False], [ True, True], [False, True], ..., [ True, True], [ True, True], [False, True]], [[ True, False], [False, True], [ True, True], ..., [ True, True], [False, True], [False, True]]]) - lp(chain, draw)float64-175.8 -176.0 ... -175.5 -175.4

array([[-175.78888015, -175.95376756, -175.51348878, ..., -176.32493595, -176.87306161, -176.38068218], [-176.82183697, -178.05673181, -177.03121249, ..., -177.99365695, -176.82719116, -176.61003754], [-183.8683053 , -184.46302189, -181.51739388, ..., -176.34994662, -180.07162385, -178.21845241], [-178.11145087, -178.78391132, -178.22960597, ..., -177.84291856, -175.46030716, -175.37347629]]) - perf_counter_diff(chain, draw)float640.0006443 0.0005936 ... 0.0005326

array([[0.0006443 , 0.00059364, 0.00077686, ..., 0.00057418, 0.0005737 , 0.00057053], [0.0005616 , 0.00064398, 0.00045637, ..., 0.00053818, 0.00055497, 0.00053972], [0.00067909, 0.00029953, 0.00068097, ..., 0.00097437, 0.00106775, 0.00105367], [0.00080125, 0.00043038, 0.00030907, ..., 0.00052912, 0.00052862, 0.00053263]]) - scaling(chain, draw, scaling_dim_0)float641.464 2.144 1.464 ... 1.331 2.144

array([[[1.4641 , 2.14358881], [1.4641 , 2.14358881], [1.4641 , 2.14358881], ..., [1.4641 , 2.14358881], [1.4641 , 2.14358881], [1.4641 , 2.14358881]], [[1.331 , 3.22102 ], [1.331 , 3.22102 ], [1.331 , 3.22102 ], ..., [1.331 , 3.22102 ], [1.331 , 3.22102 ], [1.331 , 3.22102 ]], [[1.331 , 2.662 ], [1.331 , 2.662 ], [1.331 , 2.662 ], ..., [1.331 , 2.662 ], [1.331 , 2.662 ], [1.331 , 2.662 ]], [[1.331 , 2.14358881], [1.331 , 2.14358881], [1.331 , 2.14358881], ..., [1.331 , 2.14358881], [1.331 , 2.14358881], [1.331 , 2.14358881]]]) - acceptance_rate(chain, draw)float640.8281 0.842 1.0 ... 0.7772 0.9815

array([[0.82811365, 0.84204814, 1. , ..., 0.80923154, 0.96817314, 0.80257218], [0.75240172, 0.80085771, 1. , ..., 0.97076323, 1. , 0.86651098], [0.73964042, 0.66407729, 1. , ..., 0.98520429, 0.74480852, 0.84496631], [0.62466223, 0.87694873, 1. , ..., 0.84047195, 0.77716581, 0.98152133]]) - step_size(chain, draw)float641.069 1.069 1.069 ... 1.626 1.626

array([[1.06944462, 1.06944462, 1.06944462, ..., 1.06944462, 1.06944462, 1.06944462], [1.47296891, 1.47296891, 1.47296891, ..., 1.47296891, 1.47296891, 1.47296891], [0.94296666, 0.94296666, 0.94296666, ..., 0.94296666, 0.94296666, 0.94296666], [1.62616905, 1.62616905, 1.62616905, ..., 1.62616905, 1.62616905, 1.62616905]])

- created_at :

- 2021-10-25T16:58:57.222803

- arviz_version :

- 0.11.2

- inference_library :

- pymc3

- inference_library_version :

- 3.11.2

- sampling_time :

- 26.108917951583862

- tuning_steps :

- 1000

<xarray.Dataset> Dimensions: (chain: 4, draw: 10000, accept_dim_0: 2, accepted_dim_0: 2, scaling_dim_0: 2) Coordinates: * chain (chain) int64 0 1 2 3 * draw (draw) int64 0 1 2 3 4 5 ... 9995 9996 9997 9998 9999 * accept_dim_0 (accept_dim_0) int64 0 1 * accepted_dim_0 (accepted_dim_0) int64 0 1 * scaling_dim_0 (scaling_dim_0) int64 0 1 Data variables: (12/16) accept (chain, draw, accept_dim_0) float64 0.0 0.09958 ... 1.0 diverging (chain, draw) bool False False False ... False False max_energy_error (chain, draw) float64 0.2895 0.2215 ... 1.655 0.06026 step_size_bar (chain, draw) float64 1.148 1.148 1.148 ... 1.151 1.151 tree_depth (chain, draw) int64 2 2 2 1 2 2 2 2 ... 2 1 2 2 2 2 2 2 energy_error (chain, draw) float64 0.05689 0.09843 ... -0.02892 ... ... accepted (chain, draw, accepted_dim_0) bool False False ... True lp (chain, draw) float64 -175.8 -176.0 ... -175.5 -175.4 perf_counter_diff (chain, draw) float64 0.0006443 0.0005936 ... 0.0005326 scaling (chain, draw, scaling_dim_0) float64 1.464 ... 2.144 acceptance_rate (chain, draw) float64 0.8281 0.842 1.0 ... 0.7772 0.9815 step_size (chain, draw) float64 1.069 1.069 1.069 ... 1.626 1.626 Attributes: created_at: 2021-10-25T16:58:57.222803 arviz_version: 0.11.2 inference_library: pymc3 inference_library_version: 3.11.2 sampling_time: 26.108917951583862 tuning_steps: 1000xarray.Dataset -

- disasters_dim_0: 111

- disasters_dim_0(disasters_dim_0)int640 1 2 3 4 5 ... 106 107 108 109 110

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110])

- disasters(disasters_dim_0)float644.0 5.0 4.0 0.0 ... 0.0 1.0 0.0 1.0

array([ 4., 5., 4., 0., 1., 4., 3., 4., 0., 6., 3., 3., 4., 0., 2., 6., 3., 3., 5., 4., 5., 3., 1., 4., 4., 1., 5., 5., 3., 4., 2., 5., 2., 2., 3., 4., 2., 1., 3., nan, 2., 1., 1., 1., 1., 3., 0., 0., 1., 0., 1., 1., 0., 0., 3., 1., 0., 3., 2., 2., 0., 1., 1., 1., 0., 1., 0., 1., 0., 0., 0., 2., 1., 0., 0., 0., 1., 1., 0., 2., 3., 3., 1., nan, 2., 1., 1., 1., 1., 2., 4., 2., 0., 0., 1., 4., 0., 0., 0., 1., 0., 0., 0., 0., 0., 1., 0., 0., 1., 0., 1.])

- created_at :

- 2021-10-25T16:59:00.634784

- arviz_version :

- 0.11.2

- inference_library :

- pymc3

- inference_library_version :

- 3.11.2

<xarray.Dataset> Dimensions: (disasters_dim_0: 111) Coordinates: * disasters_dim_0 (disasters_dim_0) int64 0 1 2 3 4 5 ... 106 107 108 109 110 Data variables: disasters (disasters_dim_0) float64 4.0 5.0 4.0 0.0 ... 1.0 0.0 1.0 Attributes: created_at: 2021-10-25T16:59:00.634784 arviz_version: 0.11.2 inference_library: pymc3 inference_library_version: 3.11.2xarray.Dataset

with disaster_model:
    axes_arr = az.plot_trace(trace)
plt.draw()
for ax in axes_arr.flatten():
    if ax.get_title() == "switchpoint":
        labels = [label.get_text() for label in ax.get_xticklabels()]
        ax.set_xticklabels(labels, rotation=45, ha="right")
        break
plt.draw()

Note that the rate random variable does not appear in the trace. That is fine in this case, because it is not of interest in itself. However, if there is a deterministic random variable that one does want to see in the trace, this can be achieved by putting the definition of that variable into pm.Deterministic, and giving it a name, as follows:

rate = pm.Deterministic("rate", pm.math.switch(switchpoint >= years, early_rate, late_rate))

For more details, see the API documentation.
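To make the role of rate concrete, the sketch below evaluates the same piecewise rule in plain Python for one fixed draw of the parameters. The values of `switchpoint`, `early_rate` and `late_rate` and the short `years` range are hypothetical, chosen only for illustration; they are not results from the model.

```python
# Hypothetical single posterior draw (illustrative values only).
switchpoint = 1889
early_rate = 3.0
late_rate = 1.0

years = list(range(1886, 1893))

# Elementwise equivalent of switch(switchpoint >= years, early_rate, late_rate).
rate = [early_rate if switchpoint >= year else late_rate for year in years]

for year, r in zip(years, rate):
    print(year, r)
```

Because rate is fully determined by the other three variables, storing it in the trace adds no information, only convenience.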

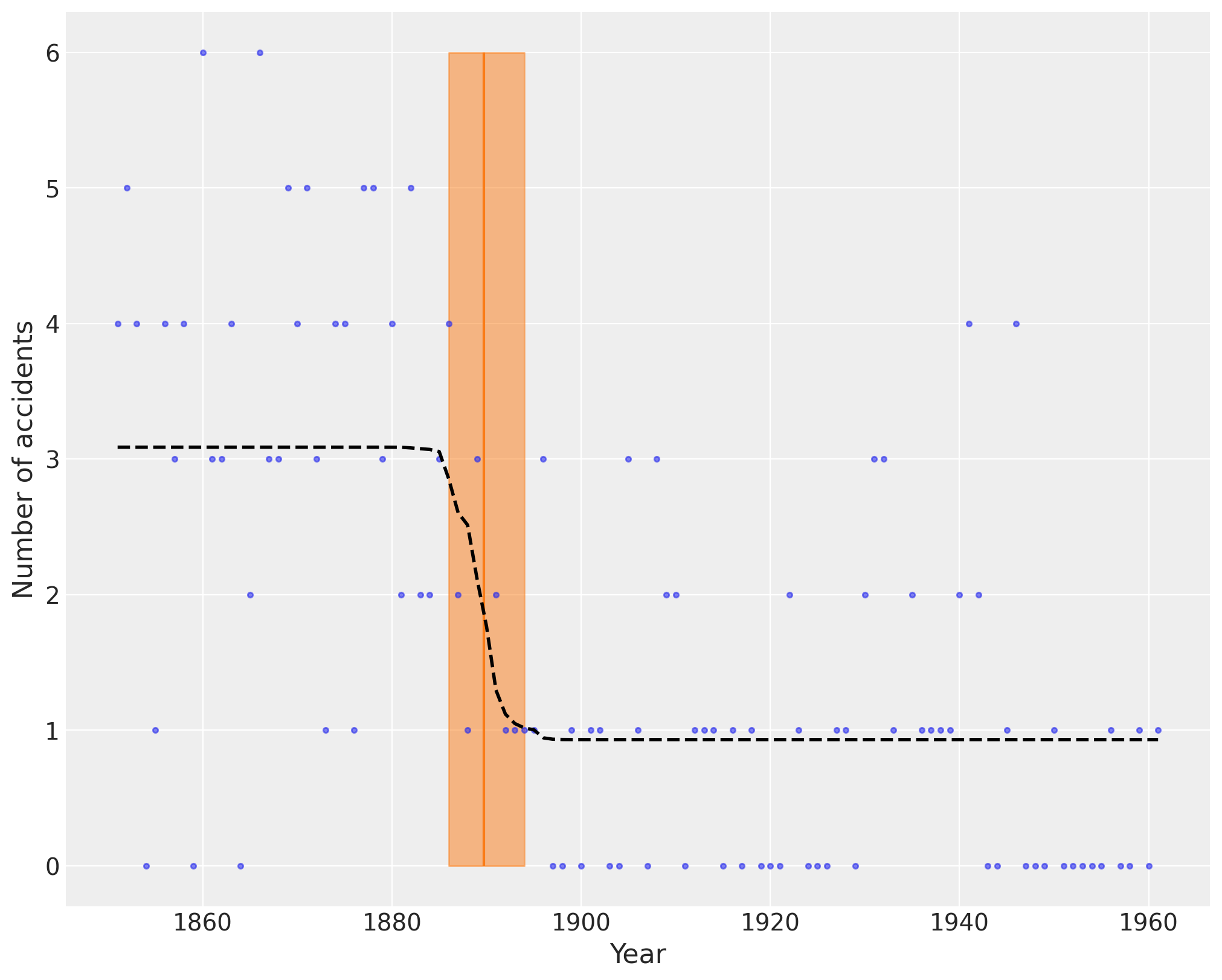

The following plot shows the switch point as an orange vertical line, together with its highest posterior density (HPD) interval as a semitransparent band. The dashed black line shows the accident rate.

plt.figure(figsize=(10, 8))
plt.plot(years, disaster_data, ".", alpha=0.6)
plt.ylabel("Number of accidents", fontsize=16)
plt.xlabel("Year", fontsize=16)

# Posterior mean of the switchpoint as a vertical line
plt.vlines(trace["switchpoint"].mean(), disaster_data.min(), disaster_data.max(), color="C1")

# Posterior mean accident rate for each year
average_disasters = np.zeros_like(disaster_data, dtype="float")
for i, year in enumerate(years):
    idx = year < trace["switchpoint"]
    average_disasters[i] = np.mean(np.where(idx, trace["early_rate"], trace["late_rate"]))

# HPD interval of the switchpoint as a shaded band
sp_hpd = az.hdi(trace["switchpoint"])
plt.fill_betweenx(
    y=[disaster_data.min(), disaster_data.max()],
    x1=sp_hpd[0],
    x2=sp_hpd[1],
    alpha=0.5,
    color="C1",
)
plt.plot(years, average_disasters, "k--", lw=2);
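The shaded band in the plot code above is a highest-density interval of the switchpoint samples. As a minimal sketch of the idea, assuming a unimodal sample and a simple sliding-window rule, the function below returns the narrowest interval that contains a given fraction of the draws. The function `hdi` defined here is a stand-in written for this example and is not ArviZ's implementation; the sample values are made up.

```python
import math


def hdi(samples, mass=0.94):
    """Narrowest interval containing at least `mass` of the samples."""
    xs = sorted(samples)
    n = len(xs)
    # Number of samples the interval must contain (guarding against float error).
    k = max(1, math.ceil(mass * n - 1e-9))
    # Slide a window of k consecutive sorted samples and keep the narrowest one.
    start = min(range(n - k + 1), key=lambda i: xs[i + k - 1] - xs[i])
    return xs[start], xs[start + k - 1]


# Hypothetical switchpoint draws with one outlying value.
draws = [1886, 1888, 1889, 1889, 1889, 1889, 1890, 1890, 1891, 1897]
print(hdi(draws, mass=0.8))
```

Unlike an equal-tailed interval, this construction is free to exclude the outlying draw on one side, which is why it suits skewed or jagged posteriors such as the switchpoint.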

Arbitrary deterministics¶

Due to its reliance on Theano, PyMC3 provides many mathematical functions and operators for transforming random variables into new random variables. However, the library of functions in Theano is not exhaustive, therefore Theano and PyMC3 provide functionality for creating arbitrary Theano functions in pure Python, and including these functions in PyMC models. This is supported with the as_op function decorator.

Theano needs to know the types of the inputs and outputs of a function, which are specified for as_op by itypes for inputs and otypes for outputs. The Theano documentation includes an overview of the available types.

import theano.tensor as tt
from theano.compile.ops import as_op

@as_op(itypes=[tt.lscalar], otypes=[tt.lscalar])
def crazy_modulo3(value):
    if value > 0:
        return value % 3
    else:
        return (-value + 1) % 3

with pm.Model() as model_deterministic:
    a = pm.Poisson('a', 1)
    b = crazy_modulo3(a)

An important drawback of this approach is that Theano cannot inspect these functions to compute the gradient required by the Hamiltonian-based samplers. Therefore, it is not possible to use the HMC or NUTS samplers for a model that uses such an operator. However, it is possible to add a gradient if we inherit from theano.Op instead of using as_op. The PyMC example set includes a more elaborate example of the usage of as_op.
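For intuition about why no gradient is available, it can help to look at the wrapped logic without Theano. The sketch below is the same branching rule as an ordinary Python function applied to plain integers; the name `crazy_modulo3_py` and the test inputs are introduced here purely for illustration.

```python
def crazy_modulo3_py(value):
    """Undecorated version of the rule above, for plain Python integers."""
    if value > 0:
        return value % 3
    return (-value + 1) % 3


for v in (-2, 0, 4):
    print(v, crazy_modulo3_py(v))
```

The output jumps between the integers 0, 1 and 2 as the input changes, so there is no smooth function for an automatic differentiation tool to work with, and from Theano's point of view the function body is opaque in any case.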

Arbitrary distributions¶

Similarly, the library of statistical distributions in PyMC3 is not exhaustive, but PyMC3 allows for the creation of user-defined functions for an arbitrary probability distribution. For simple statistical distributions, the DensityDist function takes as an argument any function that calculates a log-probability \(\log(p(x))\). This function may employ other random variables in its calculation. Here is an example inspired by a blog post by Jake Vanderplas on which priors to use for a linear regression (Vanderplas, 2014).

import theano.tensor as tt

with pm.Model() as model:
    alpha = pm.Uniform('intercept', -100, 100)

    # Create custom densities
    beta = pm.DensityDist('beta', lambda value: -1.5 * tt.log(1 + value**2), testval=0)
    eps = pm.DensityDist('eps', lambda value: -tt.log(tt.abs_(value)), testval=1)

    # Create likelihood
    like = pm.Normal('y_est', mu=alpha + beta * X, sigma=eps, observed=Y)
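The two lambda functions above return unnormalized log-densities. As a quick check of what they encode, the sketch below evaluates the same expressions with the standard `math` module on plain floats. The names `slope_logp` and `scale_logp` are hypothetical stand-ins for the two lambdas, and the inputs are arbitrary.

```python
import math


def slope_logp(value):
    """Log of the unnormalized density (1 + value^2)^(-3/2) used for the slope."""
    return -1.5 * math.log(1 + value**2)


def scale_logp(value):
    """Log of the unnormalized density 1 / |value| used for the noise scale."""
    return -math.log(abs(value))


print(math.exp(slope_logp(0.0)))  # the density is largest at a slope of zero
print(math.exp(slope_logp(1.0)))  # and decays as the slope grows
print(math.exp(scale_logp(2.0)))  # 1 / 2, up to floating-point rounding
```

Neither density is normalized, which is acceptable for MCMC because the samplers only need the log-probability up to an additive constant.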

For more complex distributions, one can create a subclass of Continuous or Discrete and provide the custom logp function, as required. This is how the built-in distributions in PyMC are specified. As an example, fields like psychology and astrophysics have complex likelihood functions for a particular process that may require numerical approximation. In these cases, it is impossible to write the function in terms of predefined Theano operators, and we must use a custom Theano operator, either by using as_op or by inheriting from theano.Op.

The beta variable above can be implemented as a Continuous subclass, as shown below together with a helper function for the log-probability.

class Beta(pm.Continuous):
    def __init__(self, mu, *args, **kwargs):
        super(Beta, self).__init__(*args, **kwargs)
        self.mu = mu
        self.mode = mu

    def logp(self, value):
        mu = self.mu
        return beta_logp(value - mu)

def beta_logp(value):
    # tt.log keeps the expression symbolic, as in the DensityDist example
    return -1.5 * tt.log(1 + (value)**2)

with pm.Model() as model:
    beta = Beta('slope', mu=0, testval=0)

If your logp cannot be expressed in Theano, you can decorate the function with as_op as follows: @as_op(itypes=[tt.dscalar], otypes=[tt.dscalar]). Note that this will create a black-box Python function that will be much slower and will not provide the gradients necessary for samplers such as NUTS.

Generalized Linear Models¶

Generalized Linear Models (GLMs) are a class of flexible models that are widely used to estimate regression relationships between a single outcome variable and one or multiple predictors. Because these models are so common, PyMC3 offers a glm submodule that allows flexible creation of various GLMs with an intuitive R-like syntax that is implemented via the patsy module.

The glm submodule requires data to be included as a pandas DataFrame. Hence, for our linear regression example:

# Convert X and Y to a pandas DataFrame

df = pd.DataFrame({'x1': X1, 'x2': X2, 'y': Y})

The model can then be very concisely specified in one line of code.

from pymc3.glm import GLM

with pm.Model() as model_glm:
    GLM.from_formula('y ~ x1 + x2', df)
    trace = pm.sample()

/var/folders/_s/v5m4jp4x2y332nzvt9zn1qxr0000gn/T/ipykernel_76993/351848644.py:5: FutureWarning: In v4.0, pm.sample will return an `arviz.InferenceData` object instead of a `MultiTrace` by default. You can pass return_inferencedata=True or return_inferencedata=False to be safe and silence this warning.

trace = pm.sample()

Auto-assigning NUTS sampler...

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [sd, x2, x1, Intercept]

Sampling 4 chains for 1_000 tune and 1_000 draw iterations (4_000 + 4_000 draws total) took 13 seconds.
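The variables listed in the sampler log above (Intercept, x1, x2 and sd) indicate how the formula 'y ~ x1 + x2' is expanded: one intercept, one coefficient per predictor, and a noise scale. The sketch below computes the implied mean for two made-up rows in plain Python. The coefficient values, the rows and the helper `linear_predictor` are hypothetical and only illustrate the arithmetic.

```python
# Hypothetical coefficient values, named after the variables in the sampler log.
coefs = {"Intercept": 1.0, "x1": 2.0, "x2": -0.5}

# Two made-up rows of predictors.
rows = [{"x1": 0.5, "x2": 2.0}, {"x1": -1.0, "x2": 4.0}]


def linear_predictor(row, coefs):
    """Mean of y implied by the formula 'y ~ x1 + x2' for one row."""
    return coefs["Intercept"] + coefs["x1"] * row["x1"] + coefs["x2"] * row["x2"]


mu = [linear_predictor(row, coefs) for row in rows]
print(mu)
```

Under the default normal family, each observed y is then modeled as normally distributed around its entry in `mu` with standard deviation sd.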

The error distribution, if not specified via the family argument, is assumed to be normal. In the case of logistic regression, this can be modified by passing in a Binomial family object.

from pymc3.glm.families import Binomial

df_logistic = pd.DataFrame({'x1': X1, 'y': Y > np.median(Y)})

with pm.Model() as model_glm_logistic:
    GLM.from_formula('y ~ x1', df_logistic, family=Binomial())
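In a logistic regression the linear predictor is mapped to a probability through the inverse logit function, and each binary outcome contributes a Bernoulli log-probability. The sketch below spells this out in plain Python, assuming a logit link; the coefficients, the data and the helper names `inv_logit` and `bernoulli_logp` are hypothetical and are not taken from the glm submodule.

```python
import math


def inv_logit(eta):
    """Map a linear predictor to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-eta))


def bernoulli_logp(y, p):
    """Log-probability of a binary outcome y (True/False) given probability p."""
    return math.log(p) if y else math.log(1.0 - p)


# Hypothetical coefficients and data, for illustration only.
intercept, slope = 0.0, 1.5
x1 = [-2.0, 0.0, 2.0]
y = [False, True, True]

probs = [inv_logit(intercept + slope * x) for x in x1]
log_like = sum(bernoulli_logp(yi, pi) for yi, pi in zip(y, probs))
print(probs, log_like)
```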

For a more complete and flexible formula interface, including hierarchical GLMs, see Bambi.

Discussion¶

Probabilistic programming is an emerging paradigm in statistical learning, of which Bayesian modeling is an important sub-discipline. The signature characteristics of probabilistic programming (specifying variables as probability distributions and conditioning variables on other variables and on observations) make it a powerful tool for building models in a variety of settings, and over a range of model complexity. Accompanying the rise of probabilistic programming has been a burst of innovation in fitting methods for Bayesian models that represent notable improvement over existing MCMC methods. Yet, despite this expansion, there are few software packages available that have kept pace with the methodological innovation, and still fewer that allow non-expert users to implement models.

PyMC3 provides a probabilistic programming platform for quantitative researchers to implement statistical models flexibly and succinctly. A large library of statistical distributions and several pre-defined fitting algorithms allow users to focus on the scientific problem at hand, rather than the implementation details of Bayesian modeling. The choice of Python as a development language, rather than a domain-specific language, means that PyMC3 users are able to work interactively to build models, introspect model objects, and debug or profile their work, using a dynamic, high-level programming language that is easy to learn. The modular, object-oriented design of PyMC3 means that adding new fitting algorithms or other features is straightforward. In addition, PyMC3 comes with several features not found in most other packages, most notably Hamiltonian-based samplers and automatic transforms of constrained random variables, which are otherwise offered only by Stan. Unlike Stan, however, PyMC3 supports discrete variables as well as non-gradient-based sampling algorithms like Metropolis-Hastings and Slice sampling.

Development of PyMC3 is an ongoing effort and several features are planned for future versions. Most notably, variational inference techniques are often more efficient than MCMC sampling, at the cost of generalizability. More recently, however, black-box variational inference algorithms have been developed, such as automatic differentiation variational inference (ADVI; Kucukelbir et al., 2017). This algorithm is slated for addition to PyMC3. Because PyMC3 is an open-source scientific computing toolkit, we encourage researchers developing new fitting algorithms for Bayesian models to provide reference implementations in PyMC3. Since samplers can be written in pure Python code, they can be implemented generally so that they work on arbitrary PyMC3 models, giving authors a larger audience for their methods.

References¶

Patil, A., D. Huard and C.J. Fonnesbeck. (2010) PyMC: Bayesian Stochastic Modelling in Python. Journal of Statistical Software, 35(4), pp. 1-81

Bastien, F., Lamblin, P., Pascanu, R., Bergstra, J., Goodfellow, I., Bergeron, A., Bouchard, N., Warde-Farley, D., and Bengio, Y. (2012) “Theano: new features and speed improvements”. NIPS 2012 deep learning workshop.

Bergstra, J., Breuleux, O., Bastien, F., Lamblin, P., Pascanu, R., Desjardins, G., Turian, J., Warde-Farley, D., and Bengio, Y. (2010) “Theano: A CPU and GPU Math Expression Compiler”. Proceedings of the Python for Scientific Computing Conference (SciPy) 2010. June 30 - July 3, Austin, TX

Lunn, D.J., Thomas, A., Best, N., and Spiegelhalter, D. (2000) WinBUGS – a Bayesian modelling framework: concepts, structure, and extensibility. Statistics and Computing, 10:325–337.

Neal, R.M. Slice sampling. Annals of Statistics. (2003). doi:10.2307/3448413.

van Rossum, G. The Python Library Reference Release 2.6.5., (2010). URL http://docs.python.org/library/.

Duane, S., Kennedy, A. D., Pendleton, B. J., and Roweth, D. (1987) “Hybrid Monte Carlo”, Physics Letters, vol. 195, pp. 216-222.

Stan Development Team. (2014). Stan: A C++ Library for Probability and Sampling, Version 2.5.0. http://mc-stan.org.

Gamerman, D. Markov Chain Monte Carlo: statistical simulation for Bayesian inference. Chapman and Hall, 1997.

Hoffman, M. D., & Gelman, A. (2014). The No-U-Turn Sampler: Adaptively Setting Path Lengths in Hamiltonian Monte Carlo. The Journal of Machine Learning Research, 30.

Kucukelbir, A., Tran, D., Ranganath, R., Gelman, A., and Blei, D.M. (2017) Automatic differentiation variational inference. The Journal of Machine Learning Research, 18, pp. 430-474. http://arxiv.org/abs/1506.03431

Vanderplas, Jake. “Frequentism and Bayesianism IV: How to be a Bayesian in Python.” Pythonic Perambulations. N.p., 14 Jun 2014. Web. 27 May. 2015. https://jakevdp.github.io/blog/2014/06/14/frequentism-and-bayesianism-4-bayesian-in-python/.

R.G. Jarrett. A note on the intervals between coal mining disasters. Biometrika, 66:191–193, 1979.